
Online playground (free to experiment with): https://try.redis.io/

What is Redis?

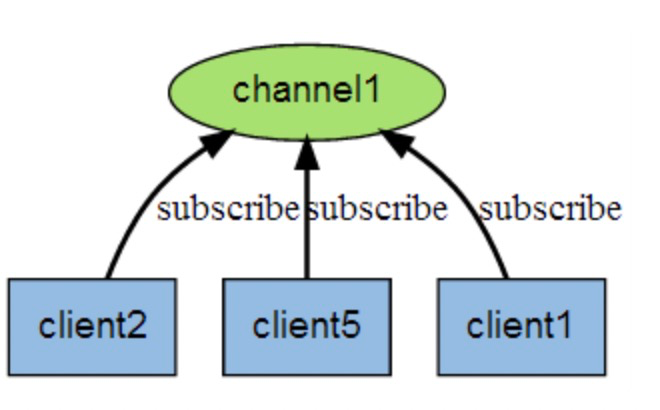

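Redis is an open-source (BSD-licensed), in-memory data structure store used as a database, cache, and message broker. Redis provides data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, HyperLogLogs, geospatial indexes, and streams. Redis has built-in replication, Lua scripting, LRU eviction, transactions, and different levels of on-disk persistence, and provides high availability via Redis Sentinel and automatic partitioning with Redis Cluster.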

Installation: Docker (fine for learning; avoid it in production where possible)

docker run -d --name redis -p 6379:6379 redis:latest redis-server --appendonly yes --requirepass "123456"

List the running containers:

docker ps

Connect to Redis:

docker exec -ti 95b40 redis-cli    (95b40 is the container ID prefix shown by docker ps)

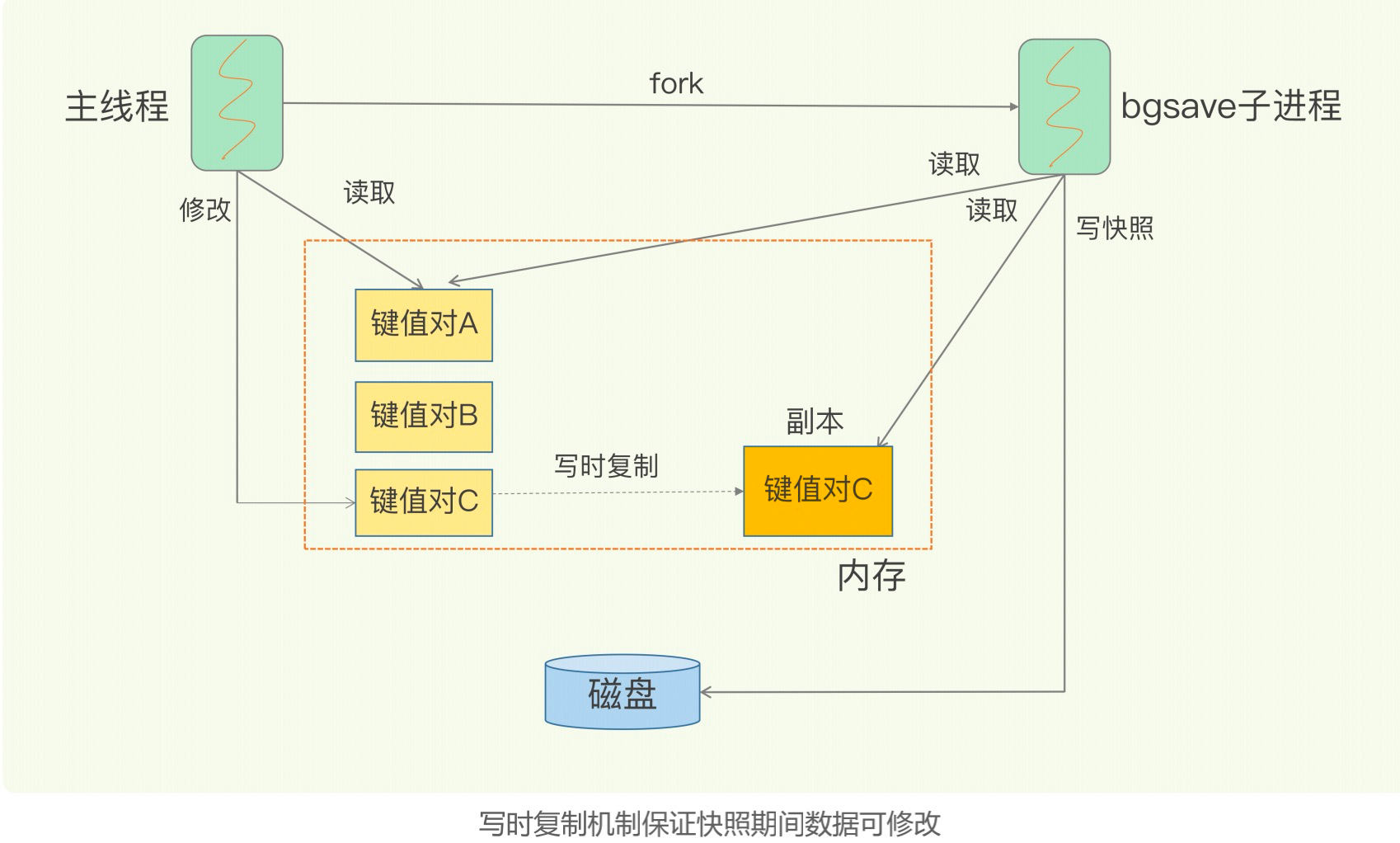

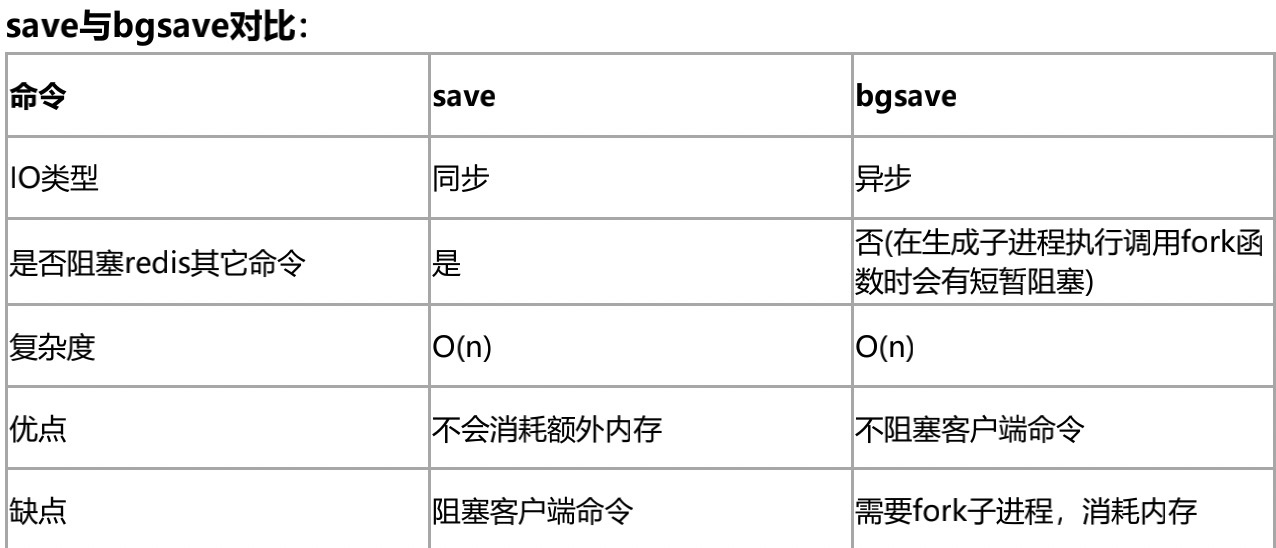

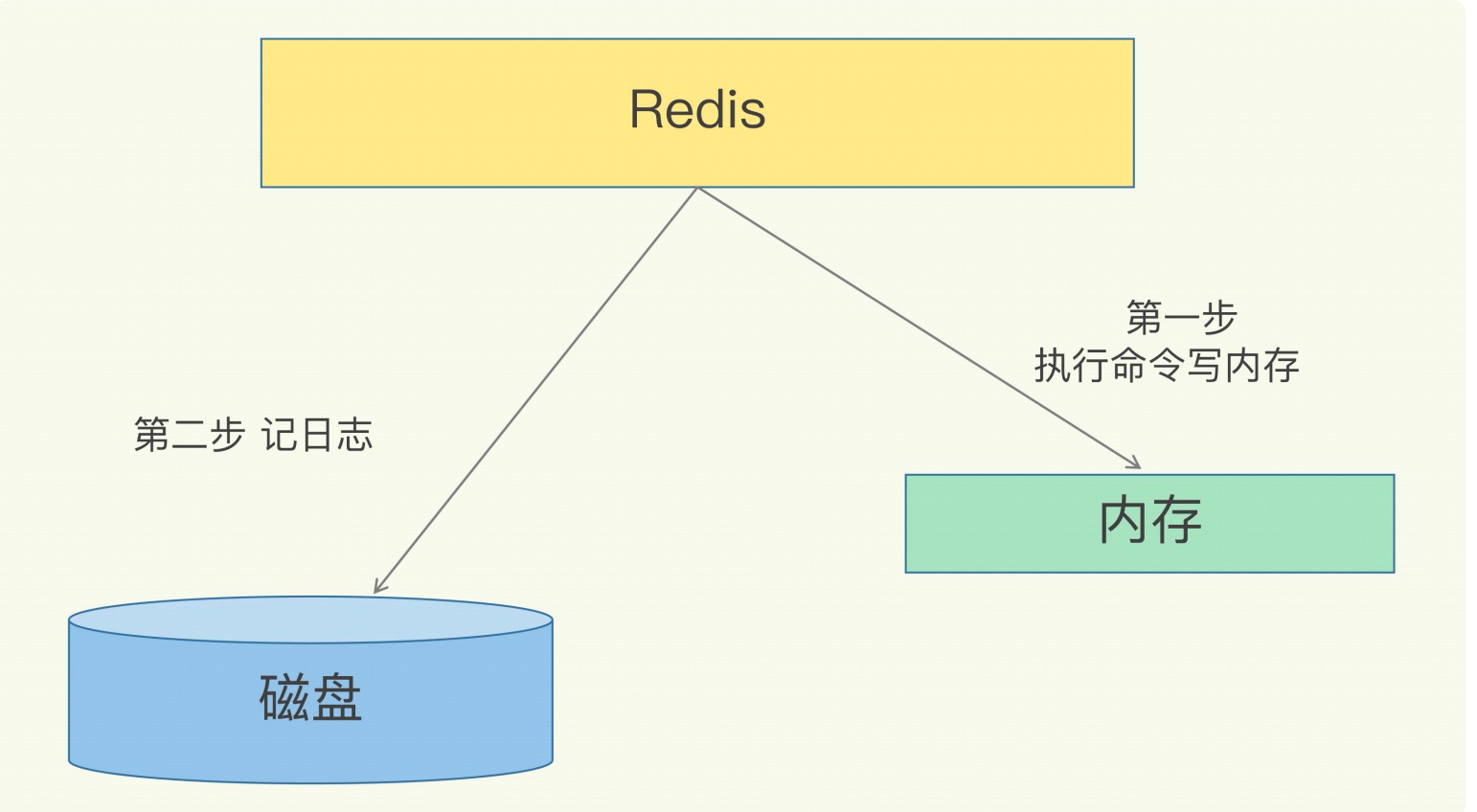

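auth 123456    (enter the password)

1.1 Problem: to guarantee data reliability, Redis has to write AOF and RDB files to disk and read them back. In high-concurrency scenarios this directly causes two new problems: writing AOF and RDB makes Redis performance jitter, and reading the RDB is slow when a Redis cluster synchronizes data or an instance recovers, which limits synchronization and recovery speed.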

Solution:

One feasible solution is non-volatile memory (NVM), because it offers both fast reads and writes and fast data persistence.

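1.1.1 What is NVM?

NVM (Non-Volatile Memory) is computer memory whose contents survive when power is cut off. Its characteristics: non-volatile, byte-addressable, high storage density, low power consumption, and read/write performance close to DRAM. Reads and writes are asymmetric (reads are much faster than writes), and its lifespan is limited, so wear leveling has to be considered, as with SSDs. Like an SSD, it has no seek time.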

Common NVM types:

- Phase-change memory (PCM)

- Magnetoresistive RAM (MRAM)

- Resistive RAM (RRAM)

- Ferroelectric RAM (FeRAM)

- Racetrack memory

- Graphene memory

- Memristor (also a kind of RRAM)

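Possible future uses of NVM

- Fully replacing main memory (DRAM): fast, but it raises safety issues, since NVM is still memory and a wild pointer can easily corrupt data on it. Write ordering to NVM must also be considered (the system optimizes memory-access instructions, so they may execute out of order), as must cache/NVM consistency: how to keep new data that is still in the CPU cache from being lost on power failure. This model also changes the existing memory model drastically and requires operating-system changes, so compatibility is poor.

- Combined with DRAM to form a new memory system: essentially the same issues as above, even more complex, but closer to reality. Even once NVM is a mature commercial product it will probably be expensive, and its lifespan is limited, so using it alone at large scale is not very realistic.

- Used as a block device (a peripheral, like an SSD): very compatible, because it is treated as a block device and only needs a driver, with no operating-system changes. The downside is that it is a peripheral: attached to the I/O bus, reads and writes are still slow.

- Used as a cache: data survives power loss, but NVM is much slower than existing CPU caches.

An existing high-performance key-value store built on NVM: HiKV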

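1.2 Four major pitfalls

1. CPU pitfalls, e.g., data-structure complexity and cross-core access;

2. Memory pitfalls, e.g., memory contention between primary-replica synchronization and AOF;

3. Persistence pitfalls, e.g., performance jitter when taking snapshots on SSDs;

4. Network pitfalls, e.g., abnormal packet loss with multiple instances.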

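1.3 Big picture: two dimensions, three main threads

The "two dimensions" are the system dimension and the application dimension; the "three main threads" are high performance, high reliability, and high scalability (the "three highs" for short).

On the application dimension there are two approaches, "scenario-driven" and "case-driven": the first organizes the overall picture, the second masters specific points.

High-performance thread: threading model, data structures, persistence, networking framework;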

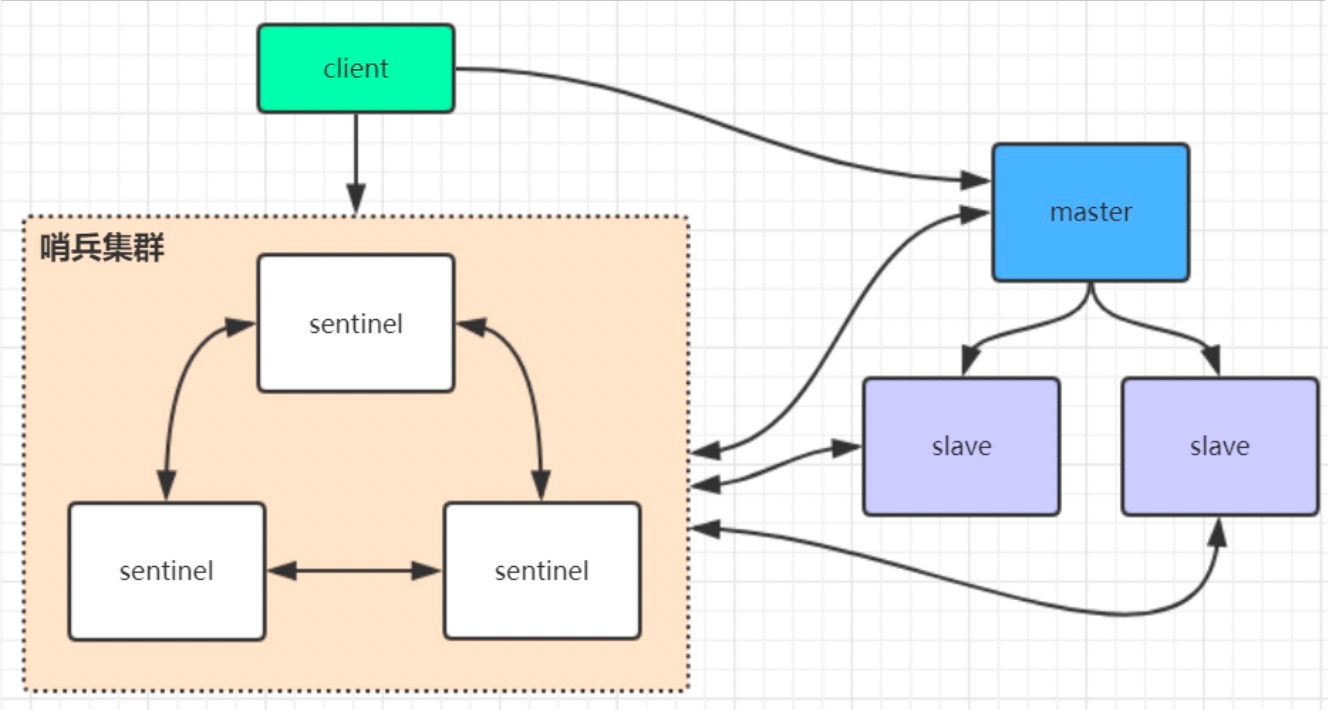

High-reliability thread: primary-replica replication, Sentinel;

High-scalability thread: data sharding, load balancing.

1.4 Redis problem map

1.2 Performance testing

redis-benchmark

==== SET ======

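100000 requests completed in 1.11 seconds    (write test with 100,000 requests)

50 parallel clients    (50 concurrent clients)

3 bytes payload    (each write carries 3 bytes)

keep alive: 1    (client connections are kept alive and reused instead of reconnecting for each request)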

98.00% <= 1 milliseconds

99.76% <= 2 milliseconds

99.97% <= 3 milliseconds

100.00% <= 3 milliseconds    (all requests completed within 3 ms)

90009.01 requests per second    (about 90,009 requests handled per second)

====== GET ======

100000 requests completed in 1.11 seconds

50 parallel clients

3 bytes payload

keep alive: 1

97.81% <= 1 milliseconds

99.82% <= 2 milliseconds

99.97% <= 3 milliseconds

100.00% <= 3 milliseconds

90252.70 requests per second

====== INCR ======

100000 requests completed in 1.04 seconds

50 parallel clients

3 bytes payload

keep alive: 1

98.81% <= 1 milliseconds

99.97% <= 2 milliseconds

100.00% <= 2 milliseconds

96339.12 requests per second

Check the maximum number of client connections Redis accepts; it can be changed with the maxclients setting in redis.conf (# maxclients 10000):

127.0.0.1:6379> CONFIG GET maxclients

1) "maxclients"

2) "10000"

1.3 Basics

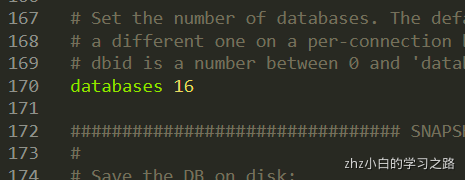

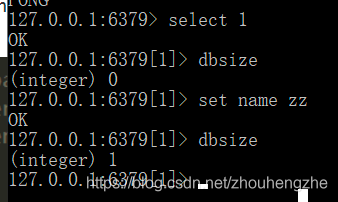

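Redis has 16 databases by default; database 0 is selected by default.

select 1     # switch to database 1

dbsize       # number of keys in the current database

keys *       # list all keys in the database

flushdb      # delete all keys in the current database

flushall     # delete all keys in all databases

1.4 Why is Redis so fast even though it is single-threaded?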

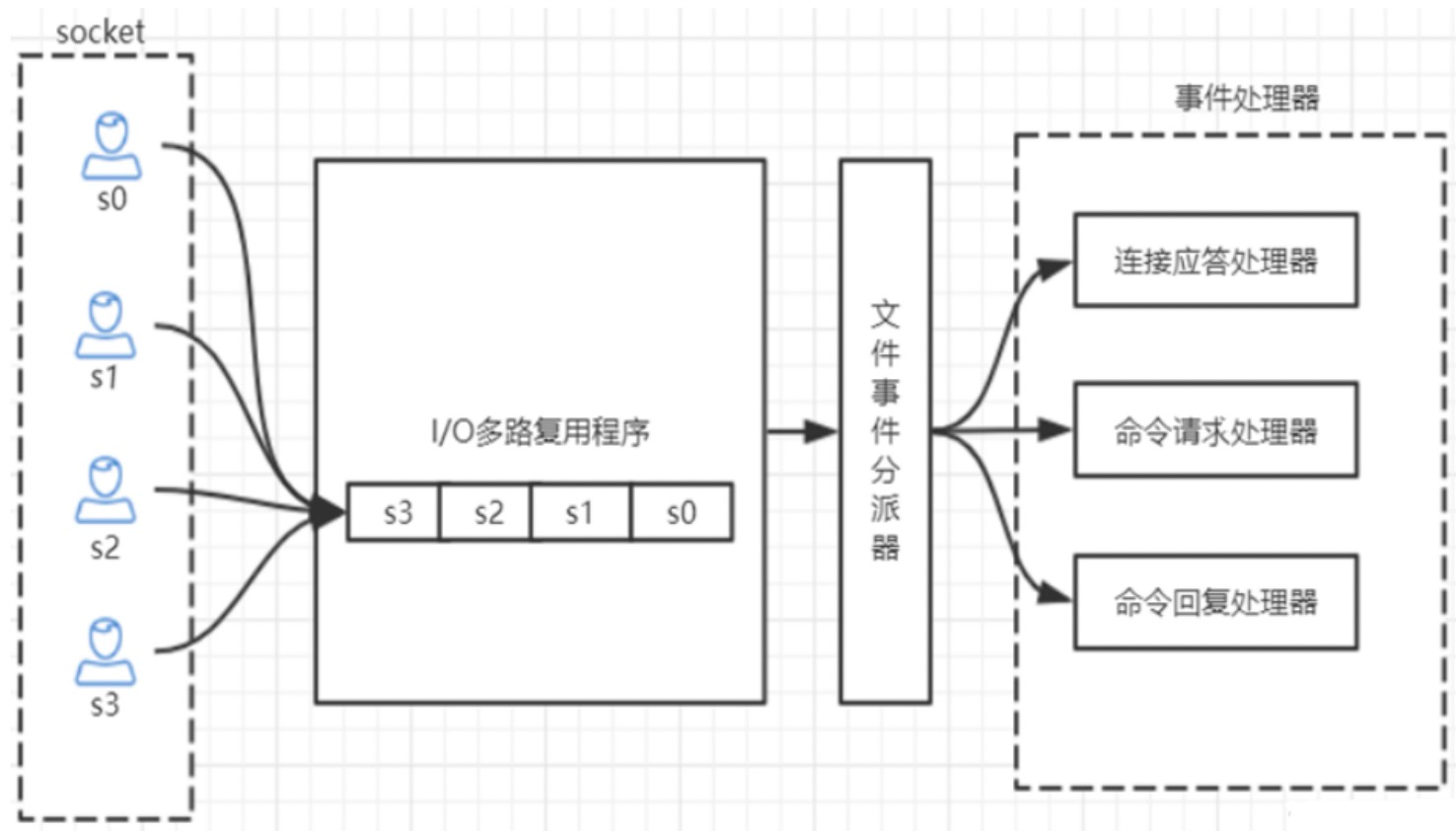

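1. All data lives in memory, so every operation is a memory-level operation, and a single thread avoids the cost of switching between threads. Because commands are executed by a single thread, use commands with care: time-consuming commands (such as keys) can easily stall Redis.

2. Redis is entirely memory-based; the vast majority of requests are pure in-memory operations, which are very fast.

3. Its data structures are simple, and so are the operations on them.

4. A single thread avoids unnecessary context switches and race conditions: no CPU switching between threads, no locks to reason about, no lock acquire/release overhead, and no performance loss from deadlocks.

5. It uses I/O multiplexing with non-blocking I/O (on Linux Redis implements multiplexing with epoll: it places connections and their events in a queue and hands them one by one to the file event dispatcher, which dispatches each event to the matching event handler).

2. The five basic data types

2.1 redis-key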

Important:

exists name        # check whether a key exists

expire name 10     # expire the key after 10 seconds

ttl name           # remaining time to live, in seconds

===================================================

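scan: iterate over keys incrementally

SCAN cursor [MATCH pattern] [COUNT count]

cursor: an integer (an index into the hash table buckets)

MATCH pattern: a glob-style pattern that keys must match

COUNT count: a hint for how many keys to examine per call (the actual number may differ); it is not the number of matching results returned

1. Start the first call with cursor 0, then pass the first integer of each reply as the cursor of the next call. The iteration ends when the returned cursor is 0 again.

2. If keys change (are added, deleted, or modified) during the scan, newly added keys may or may not be returned, and the same key may be returned more than once, so callers should deduplicate. SCAN only guarantees that keys present for the entire duration of the iteration are returned at least once.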
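Why can a key show up twice? A minimal sketch, assuming a single hash table whose size is a power of two and no rehashing in progress: the cursor is advanced by incrementing its bits in reverse order, which is the idea behind the cursor in Redis's dictionary scan. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper illustrating how a SCAN-style cursor advances.
// Assumption: one hash table whose size is a power of two, no rehashing.
final class ScanCursor {

    // Advance the cursor by incrementing its bits in reversed (high-bit-first) order.
    static long next(long cursor, long mask) {
        long v = cursor | ~mask;  // set all bits outside the mask so the carry runs into the masked bits
        v = Long.reverse(v);      // reverse all 64 bits
        v++;                      // increment the reversed value
        return Long.reverse(v);   // reverse back to get the next bucket index
    }

    // Visit every bucket of a table with `size` buckets, starting at cursor 0
    // and stopping when the cursor wraps around to 0 again.
    static List<Long> visitOrder(int size) {
        long mask = size - 1;
        List<Long> order = new ArrayList<>();
        long cursor = 0;
        do {
            order.add(cursor);
            cursor = next(cursor, mask);
        } while (cursor != 0);
        return order;
    }
}
```

For an 8-bucket table the visit order is 0, 4, 2, 6, 1, 5, 3, 7 before the cursor returns to 0. According to the comments in Redis's dict.c, this reverse-binary order is what lets a scan continue correctly when the table is resized between calls, at the price of occasionally returning an element twice.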

===================================================

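INFO: show runtime information about the Redis server. The output is split into sections, each with many fields; the main nine sections are:

Server: environment of the running server

Clients: client connection information

Memory: memory usage statistics

Persistence: persistence (RDB/AOF) information

Stats: general statistics

Replication: primary/replica replication information

CPU: CPU usage

Cluster: cluster information

Keyspace: key statistics per database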
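Each section in the output starts with a line such as # Memory and holds field:value lines. The sketch below, assuming the raw INFO text is already available as a string (how it is fetched depends on the client), splits it into sections and derives the cache hit ratio keyspace_hits / (keyspace_hits + keyspace_misses). The class name InfoParser is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: turns the text returned by INFO into section -> (field -> value).
final class InfoParser {

    static Map<String, Map<String, String>> parse(String info) {
        Map<String, Map<String, String>> sections = new LinkedHashMap<>();
        Map<String, String> current = null;
        for (String raw : info.split("\r?\n")) {
            String line = raw.trim();
            if (line.isEmpty()) continue;
            if (line.startsWith("#")) {                  // "# Memory" starts a new section
                current = new LinkedHashMap<>();
                sections.put(line.substring(1).trim(), current);
                continue;
            }
            int colon = line.indexOf(':');
            if (colon < 0 || current == null) continue;  // ignore anything unexpected
            current.put(line.substring(0, colon), line.substring(colon + 1));
        }
        return sections;
    }

    // Cache hit ratio from the Stats section; 0.0 when there is no traffic yet.
    static double hitRatio(Map<String, Map<String, String>> sections) {
        Map<String, String> stats = sections.get("Stats");
        if (stats == null) return 0.0;
        long hits = Long.parseLong(stats.get("keyspace_hits"));
        long misses = Long.parseLong(stats.get("keyspace_misses"));
        return hits + misses == 0 ? 0.0 : (double) hits / (hits + misses);
    }
}
```

With the Stats values in the sample output of this section (keyspace_hits:5, keyspace_misses:2) the ratio is 5/7, roughly 0.71. The # comments in that sample are annotations added in these notes; the real INFO output has none.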

127.0.0.1:6379> Info

# Server

redis_version:6.2.4

redis_git_sha1:00000000

redis_git_dirty:0

redis_build_id:462e443fe1573a8b

redis_mode:standalone

os:Linux 5.10.25-linuxkit x86_64

arch_bits:64

multiplexing_api:epoll

atomicvar_api:c11-builtin

gcc_version:8.3.0

process_id:1

process_supervised:no

run_id:4f735556d1b9cf041de4d7ac9c72205f45ff3ba6

tcp_port:6379

server_time_usec:1628276163065051

uptime_in_seconds:49745

uptime_in_days:0

hz:10

configured_hz:10

lru_clock:886211

executable:/data/redis-server

config_file:

io_threads_active:0

# Clients

connected_clients:1 # number of connected clients

cluster_connections:0

maxclients:10000

client_recent_max_input_buffer:32

client_recent_max_output_buffer:0

blocked_clients:0

tracking_clients:0

clients_in_timeout_table:0

# Memory

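used_memory:874456 # total memory allocated by Redis (bytes), including internal overhead and data

used_memory_human:853.96K # the same value with a unit (human-readable)

used_memory_rss:7761920

used_memory_rss_human:7.40M # memory obtained from the operating system (resident set size); usually larger than used_memory because the allocator causes memory fragmentation

used_memory_peak:893272 # peak memory consumption (bytes)

used_memory_peak_human:872.34K # peak memory consumption (human-readable)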

used_memory_peak_perc:97.89%

used_memory_overhead:830936

used_memory_startup:810048

used_memory_dataset:43520

used_memory_dataset_perc:67.57%

allocator_allocated:918336

allocator_active:1187840

allocator_resident:3883008

total_system_memory:2083807232

total_system_memory_human:1.94G

used_memory_lua:37888

used_memory_lua_human:37.00K

used_memory_scripts:0

used_memory_scripts_human:0B

number_of_cached_scripts:0

maxmemory:0 # maximum usable memory from the config (bytes); the default 0 means no limit

maxmemory_human:0B # the same limit, human-readable

maxmemory_policy:noeviction # eviction policy applied when maxmemory is reached

allocator_frag_ratio:1.29

allocator_frag_bytes:269504

allocator_rss_ratio:3.27

allocator_rss_bytes:2695168

rss_overhead_ratio:2.00

rss_overhead_bytes:3878912

mem_fragmentation_ratio:9.33

mem_fragmentation_bytes:6930216

mem_not_counted_for_evict:108

mem_replication_backlog:0

mem_clients_slaves:0

mem_clients_normal:20512

mem_aof_buffer:112

mem_allocator:jemalloc-5.1.0

active_defrag_running:0

lazyfree_pending_objects:0

lazyfreed_objects:0

# Persistence

loading:0

current_cow_size:0

current_cow_size_age:0

current_fork_perc:0.00

current_save_keys_processed:0

current_save_keys_total:0

rdb_changes_since_last_save:0

rdb_bgsave_in_progress:0

rdb_last_save_time:1628261512

rdb_last_bgsave_status:ok

rdb_last_bgsave_time_sec:0

rdb_current_bgsave_time_sec:-1

rdb_last_cow_size:405504

aof_enabled:1

aof_rewrite_in_progress:0

aof_rewrite_scheduled:0

aof_last_rewrite_time_sec:-1

aof_current_rewrite_time_sec:-1

aof_last_bgrewrite_status:ok

aof_last_write_status:ok

aof_last_cow_size:0

module_fork_in_progress:0

module_fork_last_cow_size:0

aof_current_size:8715

aof_base_size:8551

aof_pending_rewrite:0

aof_buffer_length:0

aof_rewrite_buffer_length:0

aof_pending_bio_fsync:0

aof_delayed_fsync:0

# Stats

total_connections_received:1

total_commands_processed:29

instantaneous_ops_per_sec:0 # commands executed per second

total_net_input_bytes:595

total_net_output_bytes:345

instantaneous_input_kbps:0.00

instantaneous_output_kbps:0.00

rejected_connections:0

sync_full:0

sync_partial_ok:0

sync_partial_err:0

expired_keys:0

expired_stale_perc:0.00

expired_time_cap_reached_count:0

expire_cycle_cpu_milliseconds:5434

evicted_keys:0

keyspace_hits:5

keyspace_misses:2

pubsub_channels:0

pubsub_patterns:0

latest_fork_usec:582

total_forks:2

migrate_cached_sockets:0

slave_expires_tracked_keys:0

active_defrag_hits:0

active_defrag_misses:0

active_defrag_key_hits:0

active_defrag_key_misses:0

tracking_total_keys:0

tracking_total_items:0

tracking_total_prefixes:0

unexpected_error_replies:0

total_error_replies:5

dump_payload_sanitizations:0

total_reads_processed:18

total_writes_processed:17

io_threaded_reads_processed:0

io_threaded_writes_processed:0

# Replication

role:master

connected_slaves:0

master_failover_state:no-failover

master_replid:291d8b9497669de4d1c0169b0b625199ffc00271

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:0

second_repl_offset:-1

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

# CPU

used_cpu_sys:118.254109

used_cpu_user:34.796392

used_cpu_sys_children:0.009333

used_cpu_user_children:0.003860

used_cpu_sys_main_thread:118.237090

used_cpu_user_main_thread:34.797454

# Modules

# Errorstats

errorstat_ERR:count=4

errorstat_NOAUTH:count=1

# Cluster

cluster_enabled:0

# Keyspace

db0:keys=5,expires=0,avg_ttl=0

2.2 String

2.2.1 API

2.2.2 API examples

127.0.0.1:6379> get views

(nil)

127.0.0.1:6379> incr views

(integer) 1

127.0.0.1:6379> incr views    # increment by 1

(integer) 2

127.0.0.1:6379> get views

"2"

127.0.0.1:6379> get views

"2"

127.0.0.1:6379> decr views    # decrement by 1

(integer) 1

127.0.0.1:6379> INCRBY views 10    # increment by a given step

(integer) 11

127.0.0.1:6379> DECRBY views 1    # decrement by a given step

(integer) 10

127.0.0.1:6379> DECRBY views 4

(integer) 6

===============================================

# String ranges (GETRANGE)

127.0.0.1:6379> set key "hello,zhz"

OK

127.0.0.1:6379> get key

"hello,zhz"

127.0.0.1:6379> GETRANGE key 0 1    # substring from index 0 to 1, inclusive

"he"

127.0.0.1:6379> GETRANGE key 0 -1    # the whole string, same as get key

"hello,zhz"

===============================================

# Overwrite part of a string (SETRANGE)

127.0.0.1:6379> set key2 ajksdashjdbas

OK

127.0.0.1:6379> get key2

"ajksdashjdbas"

127.0.0.1:6379> SETRANGE key2 1 xx    # overwrite starting at offset 1; returns the new length

(integer) 13

127.0.0.1:6379> get key2

"axxsdashjdbas"

===============================================

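# setex: set a value together with an expiration time

# setnx: set only if the key does not exist (used for distributed locks)

127.0.0.1:6379> setex key3 30 hello    # set key3 to hello, expiring in 30 seconds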

OK

127.0.0.1:6379> ttl key3

(integer) 24

127.0.0.1:6379> get key3

"hello"

127.0.0.1:6379> ttl key3

(integer) 14

127.0.0.1:6379> setnx mykey "redis"    # mykey does not exist, so it is created

(integer) 1

127.0.0.1:6379> keys *

1) "key2"

2) "key"

3) "mykey"

127.0.0.1:6379> setnx mykey "mongodb"    # mykey already exists, so nothing is set

(integer) 0

127.0.0.1:6379> get mykey

"redis"

===============================================

mset

mget

127.0.0.1:6379> mset k1 v1 k2 v2 k3 v3    # set several keys at once

OK

127.0.0.1:6379> keys *

1) "k3"

2) "k2"

3) "k1"

127.0.0.1:6379> mget k1 k2 k3    # get several keys at once

1) "v1"

2) "v2"

3) "v3"

127.0.0.1:6379> msetnx k1 v1 k4 v4    # msetnx is atomic: either all keys are set or none are; k1 exists, so k4 is not set either

(integer) 0

127.0.0.1:6379> get k4

(nil)

# Objects

set user:1 '{"name":"zhangsan","age":3}'    # store the user:1 object as a JSON string

# A key layout of user:{id}:{field} also works in Redis

127.0.0.1:6379> mset user:1:name zhangsan user:1:age 2

OK

127.0.0.1:6379> mget user:1:name user:1:age

1) "zhangsan"

2) "2"

=================================================

getset    # get the old value, then set a new one

127.0.0.1:6379> getset db redis    # there was no previous value, so nil is returned

(nil)

127.0.0.1:6379> get db

"redis"

127.0.0.1:6379> getset db mongodb    # returns the old value and sets the new one

"redis"

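127.0.0.1:6379> get db

"mongodb"

2.2.3 Use cases

Common string operations

SET key value //store a string key-value pair

MSET key value [key value ...] //store several string key-value pairs

SETNX key value //store a string key-value pair only if the key does not exist

GET key //get a string value

MGET key [key ...] //get several string values

DEL key [key ...] //delete one or more keys

EXPIRE key seconds //set a key's expiration time in seconds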

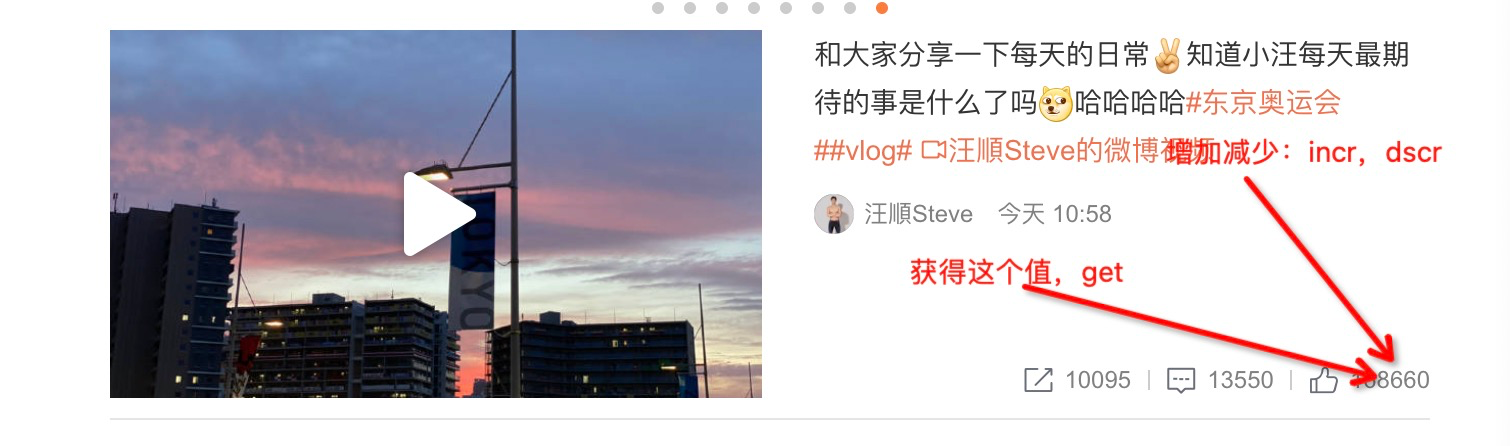

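Atomic increment/decrement (the value must be an integer)

INCR key //increment the number stored at key by 1

DECR key //decrement the number stored at key by 1

INCRBY key increment //add increment to the number stored at key

DECRBY key decrement //subtract decrement from the number stored at key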

Single-value cache

SET key value

GET key

Object cache

1) SET user:1 value (JSON data)

2) MSET user:1:name zhz user:1:balance 1888

MGET user:1:name user:1:balance

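Distributed lock

SETNX product:10001 true //returns 1: lock acquired

SETNX product:10001 true //returns 0: lock not acquired

... run the business logic

DEL product:10001 //release the lock when the business logic finishes

SET product:10001 true ex 10 nx //set the value and a 10-second expiry in one atomic command, so a process that dies while holding the lock cannot block others forever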
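To make the lock semantics concrete, here is an in-memory stand-in for SET key value NX EX seconds and DEL. It is a sketch for illustration, not a Redis client: the class FakeRedisLock is hypothetical, and the current time is passed in explicitly so expiry can be tested. Like the DEL step above, release here is unconditional, so a holder whose lock has already expired could delete a lock another client acquired afterwards; handling that is outside this sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for "SET key value NX EX seconds" and "DEL key".
// Assumption: the caller supplies the current time in milliseconds.
final class FakeRedisLock {

    private static final class Entry {
        final String value;
        final long expiresAtMillis;
        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> store = new HashMap<>();

    // Succeeds only if the key is absent or its expiry time has passed.
    synchronized boolean setNxEx(String key, String value, long ttlSeconds, long nowMillis) {
        Entry existing = store.get(key);
        if (existing != null && nowMillis <= existing.expiresAtMillis) {
            return false;                                   // someone else still holds the lock
        }
        store.put(key, new Entry(value, nowMillis + ttlSeconds * 1000));
        return true;
    }

    // Unconditional release, mirroring the DEL step.
    synchronized void del(String key) {
        store.remove(key);
    }
}
```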

计数器

INCR article:readcount:{文章id}

GET article:readcount:{文章id}

Web集群session共享

spring session + redis实现session共享

分布式系统全局序列号(预热)

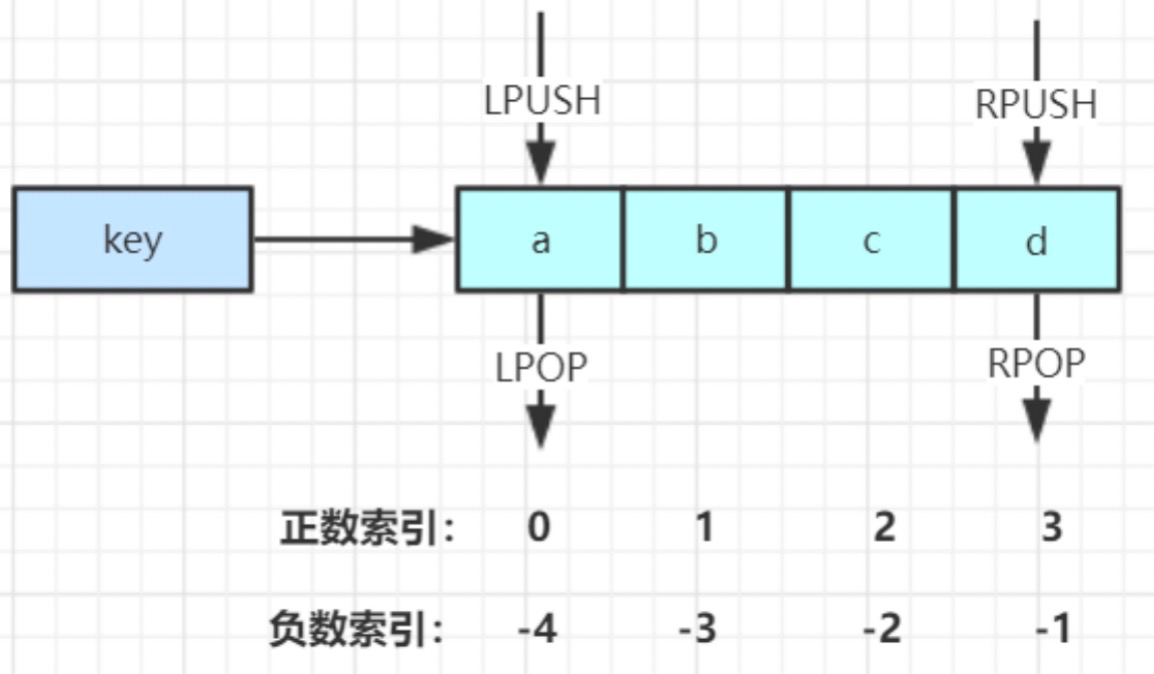

INCRBY orderId 1000 //redis批量生成序列号提升性能2.3、List

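A Redis list is a simple list of strings sorted by insertion order. You can add an element to the head (left) or the tail (right) of the list. A list can contain at most 2^32 - 1 elements (4,294,967,295, more than 4 billion elements per list).

2.3.1 API

2.3.2 API examples

=======================================

LPUSH    insert at the left (head)

RPUSH    insert at the right (tail)

127.0.0.1:6379> lpush student one    # insert one or more values at the head (left) of the list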

(integer) 1

127.0.0.1:6379> lpush student two

(integer) 2

127.0.0.1:6379> lpush student three

(integer) 3

127.0.0.1:6379> LRANGE student 0 -1    # get all values of student

1) "three"

2) "two"

3) "one"

127.0.0.1:6379> LRANGE student 0 1    # get the values in an index range

1) "three"

2) "two"

127.0.0.1:6379> rpush student right    # insert one or more values at the tail (right) of the list

(integer) 4

127.0.0.1:6379> LRANGE list 0 -1

(empty list or set)

127.0.0.1:6379> LRANGE student 0 -1

1) "three"

2) "two"

3) "one"

4) "right"

========================================

lpop    remove from the left

rpop    remove from the right

127.0.0.1:6379> LRANGE student 0 -1    # get all values of student

1) "three"

2) "two"

3) "one"

4) "right"

127.0.0.1:6379> lpop student    # remove from the left

"three"

127.0.0.1:6379> rpop student    # remove from the right

"right"

127.0.0.1:6379> LRANGE student 0 -1    # get all values of student

1) "two"

2) "one"

==========================================

lindex    return the value at the given index

LRANGE    return a range of values (0 -1 returns all of them)

127.0.0.1:6379> LRANGE student 0 -1

1) "two"

2) "one"

127.0.0.1:6379> lindex student 1

"one"

127.0.0.1:6379> lindex student 0

"two"

===========================================

llen    return the length of the list

127.0.0.1:6379> llen student

(integer) 2

===========================================

Remove specific values (LREM)

127.0.0.1:6379> lpush student three    # insert at the left

(integer) 3

127.0.0.1:6379> lpush student three

(integer) 4

127.0.0.1:6379> LRANGE student 0 -1    # query all

1) "three"

2) "three"

3) "two"

4) "one"

127.0.0.1:6379> lrem student 1 one    # remove up to 1 occurrence of the exact value one from student

(integer) 1

127.0.0.1:6379> LRANGE student 0 -1    # query all

1) "three"

2) "three"

3) "two"

127.0.0.1:6379> lrem student 1 three

(integer) 1

127.0.0.1:6379> LRANGE student 0 -1

1) "three"

2) "two"

127.0.0.1:6379> lpush student three

(integer) 3

127.0.0.1:6379> LRANGE student 0 -1

1) "three"

2) "three"

3) "two"

127.0.0.1:6379> lrem student 2 three

(integer) 2

127.0.0.1:6379> LRANGE student 0 -1

1) "two"

=====================================

ltrim: trim a list

127.0.0.1:6379> rpush mylist "hello"

(integer) 1

127.0.0.1:6379> rpush mylist "hello1"

(integer) 2

127.0.0.1:6379> rpush mylist "hello2"

(integer) 3

127.0.0.1:6379> rpush mylist "hello3"

(integer) 4

127.0.0.1:6379> ltrim mylist 1 2    # keep only the elements at indexes 1..2; the list is modified in place

OK

127.0.0.1:6379> lrange mylist 0 -1

1) "hello1"

2) "hello2"

=====================================

rpoplpush    remove the last element of a list and push it onto another list

127.0.0.1:6379> rpush mylist "hello"

(integer) 1

127.0.0.1:6379> rpush mylist "hello1"

(integer) 2

127.0.0.1:6379> rpush mylist "hello2"

(integer) 3

127.0.0.1:6379> rpush mylist "hello3"

(integer) 4

127.0.0.1:6379> rpush mylist "hello4"

(integer) 5

127.0.0.1:6379> rpoplpush mylist myotherlist    # pop the last element of mylist and push it onto myotherlist

"hello4"

127.0.0.1:6379> lrange mylist 0 -1    # query the source list

1) "hello"

2) "hello1"

3) "hello2"

4) "hello3"

127.0.0.1:6379> lrange myotherlist 0 -1    # query the destination list

1) "hello4"

=====================================

lset    replace the value at a given index (an update)

127.0.0.1:6379> EXISTS list    # check whether the list exists

(integer) 0

127.0.0.1:6379> lset list 0 item    # updating a list that does not exist returns an error

(error) ERR no such key

127.0.0.1:6379> lpush list value1

(integer) 1

127.0.0.1:6379> lrange list 0 -1

1) "value1"

127.0.0.1:6379> lset list 0 item    # the index exists, so the value is replaced

OK

127.0.0.1:6379> lrange list 0 -1

1) "item"

127.0.0.1:6379> lset list 1 item1    # an index that is out of range also returns an error

(error) ERR index out of range

=====================================

linsert    insert a value before or after a given element of the list

127.0.0.1:6379> rpush mylist "hello"

(integer) 1

127.0.0.1:6379> rpush mylist "world"

(integer) 2

127.0.0.1:6379> linsert mylist before "world" "other"    # insert other before world in mylist

(integer) 3

127.0.0.1:6379> lrange mylist 0 -1

1) "hello"

2) "other"

3) "world"

127.0.0.1:6379> linsert mylist after world new    # insert new after world in mylist

(integer) 4

127.0.0.1:6379> lrange mylist 0 -1

1) "hello"

2) "other"

3) "world"

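4) "new"

2.3.3 Use cases

Common list operations

LPUSH key value [value ...] //insert one or more values at the head (leftmost end) of list key

RPUSH key value [value ...] //insert one or more values at the tail (rightmost end) of list key

LPOP key //remove and return the first element of list key

RPOP key //remove and return the last element of list key

LRANGE key start stop //return the elements of list key in the range given by the offsets start and stop

BLPOP key [key ...] timeout //pop an element from the head of list key; if the list is empty, block for up to timeout seconds (timeout=0 blocks indefinitely)

BRPOP key [key ...] timeout //pop an element from the tail of list key; if the list is empty, block for up to timeout seconds (timeout=0 blocks indefinitely)

Use cases:

Stack (LPUSH + LPOP)

Message queue (LPUSH + RPOP)

Blocking queue (LPUSH + BRPOP)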
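The three patterns above map directly onto a double-ended queue. The sketch below uses java.util.concurrent.LinkedBlockingDeque as an in-memory stand-in (the class ListPatterns is hypothetical): LPUSH adds at the head, LPOP and RPOP remove from the head and tail, and BRPOP becomes a timed pollLast. Unlike BRPOP with timeout 0, this version always takes a finite timeout; takeLast() would be the block-forever equivalent.

```java
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.TimeUnit;

// Hypothetical in-memory analogue of a Redis list, mapping each command to a deque method.
final class ListPatterns {
    private final LinkedBlockingDeque<String> list = new LinkedBlockingDeque<>();

    void lpush(String value) { list.addFirst(value); }  // LPUSH: insert at the head (left)
    String lpop() { return list.pollFirst(); }           // LPOP: remove from the head, null if empty
    String rpop() { return list.pollLast(); }            // RPOP: remove from the tail, null if empty

    // BRPOP with a finite timeout: wait up to timeoutMillis, return null if nothing arrives.
    String brpop(long timeoutMillis) throws InterruptedException {
        return list.pollLast(timeoutMillis, TimeUnit.MILLISECONDS);
    }
}
```

LPUSH followed by LPOP behaves as a stack (last in, first out), while LPUSH followed by RPOP or BRPOP behaves as a queue (first in, first out).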

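Weibo and WeChat official-account message feeds

Weibo posts and WeChat official-account posts

zhz follows the accounts 码农开花 and 快学Java

1) 码农开花 publishes a post with message ID 10018

LPUSH msg:{zhz-ID} 10018

2) 快学Java publishes a post with message ID 10086

LPUSH msg:{zhz-ID} 10086

3) View the latest posts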

LRANGE msg:{zhz-ID} 0 4
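A minimal in-memory sketch of the msg:{zhz-ID} feed, assuming the list is capped with LTRIM after each LPUSH (as in the hot-data pattern that follows) and that reading the latest posts means LRANGE 0 count-1. MessageFeed and maxLength are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical in-memory version of a capped feed: LPUSH new IDs, LTRIM to maxLength, LRANGE newest first.
final class MessageFeed {
    private final Deque<Long> messages = new ArrayDeque<>();
    private final int maxLength;

    MessageFeed(int maxLength) { this.maxLength = maxLength; }

    // LPUSH msg:{id} messageId, then LTRIM msg:{id} 0 maxLength-1
    void publish(long messageId) {
        messages.addFirst(messageId);
        while (messages.size() > maxLength) {
            messages.removeLast();            // drop the oldest entries, as LTRIM would
        }
    }

    // LRANGE msg:{id} 0 count-1: newest first
    List<Long> latest(int count) {
        List<Long> result = new ArrayList<>();
        for (Long id : messages) {            // ArrayDeque iterates from head (newest) to tail
            if (result.size() == count) break;
            result.add(id);
        }
        return result;
    }
}
```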

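Hot data storage

Latest comments and latest-article lists: store them in a list and use LTRIM to keep the hot entries and drop old ones.

2.3.4 Summary

- A list is essentially a linked list: values can be inserted before or after an element, and at the left or right end

- If the key does not exist, a new list is created

- If the key exists, content is added to it

- If all values are removed, the empty list is deleted, so the key no longer exists

- Inserting or changing values at either end is the most efficient; inserting in the middle is slower

2.4 Set

Characteristics:

- Values in a set cannot repeat

- A set is an unordered collection of unique elements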

2.4.1 API

2.4.2 API examples

======================================================================

sadd    add members to a set

smembers    list all members of a set

sismember    check whether a value is a member of the set: 1 if it is, 0 if not

127.0.0.1:6379> sadd myset "hello"    # add a member

(integer) 1

127.0.0.1:6379> sadd myset "zhz"

(integer) 1

127.0.0.1:6379> sadd myset "lovezhz"

(integer) 1

127.0.0.1:6379> smembers myset    # list all members

1) "zhz"

2) "lovezhz"

3) "hello"

127.0.0.1:6379> sismember myset hello    # is hello a member?

(integer) 1

127.0.0.1:6379> sismember myset world    # is world a member?

(integer) 0

======================================================================

scard    get the number of members in a set

127.0.0.1:6379> scard myset    # number of members

(integer) 3

======================================================================

srem    remove one or more members from a set

127.0.0.1:6379> srem myset hello    # remove a member

(integer) 1

127.0.0.1:6379> smembers myset

1) "zhz"

2) "lovezhz"

127.0.0.1:6379> scard myset

(integer) 2

======================================================================

srandmember    return one or more random members

127.0.0.1:6379> srandmember myset

"666"

127.0.0.1:6379> srandmember myset    # return a random member

"888"

127.0.0.1:6379> srandmember myset

"zhz"

127.0.0.1:6379> srandmember myset 2    # return several random members

1) "zhz"

2) "888"

======================================================================

spop    remove and return random members of a set

127.0.0.1:6379> spop myset    # remove a random member

"bai"

127.0.0.1:6379> spop myset

"lovezhz"

127.0.0.1:6379> smembers myset

1) "zhz"

2) "666"

3) "888"

4) "xiao"

5) "didi"

======================================================================

smove    move a given member to another set

127.0.0.1:6379> sadd myset "hello"

(integer) 1

127.0.0.1:6379> sadd myset "world"

(integer) 1

127.0.0.1:6379> sadd myset "zhz"

(integer) 1

127.0.0.1:6379> sadd myset "666"

(integer) 1

127.0.0.1:6379> sadd myset "888"

(integer) 1

127.0.0.1:6379> sadd myset "000"

(integer) 1

127.0.0.1:6379> smembers myset

1) "666"

2) "world"

3) "hello"

4) "zhz"

5) "000"

6) "888"

127.0.0.1:6379> sadd myset2 "111"

(integer) 1

127.0.0.1:6379> smembers myset2

1) "111"

127.0.0.1:6379> smove myset myset2 "666"

(integer) 1

127.0.0.1:6379> smove myset myset2 "000"

(integer) 1

127.0.0.1:6379> smove myset myset2 "888"

(integer) 1

127.0.0.1:6379> smembers myset

1) "zhz"

2) "hello"

3) "world"

127.0.0.1:6379> smembers myset2

1) "888"

2) "666"

3) "000"

4) "111"

======================================================================

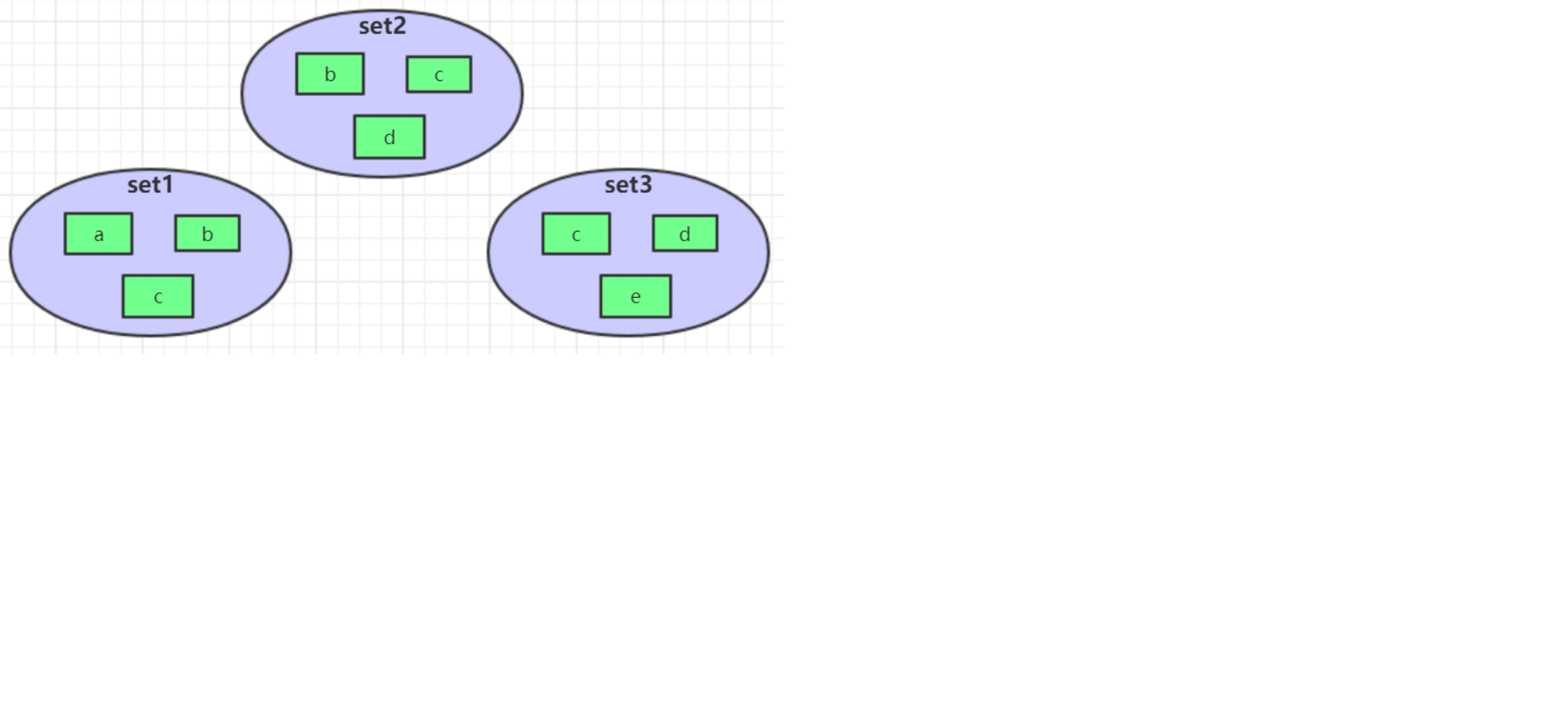

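Weibo, Bilibili: mutual follows (intersection)

Set operations:

- Difference: sdiff returns the members of the first set that are not in the following sets

- Intersection: sinter

- Union: sunion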

127.0.0.1:6379> sadd key1 a

(integer) 1

127.0.0.1:6379> sadd key1 b

(integer) 1

127.0.0.1:6379> sadd key1 c

(integer) 1

127.0.0.1:6379> sadd key1 d

(integer) 1

127.0.0.1:6379> sadd key2 d

(integer) 1

127.0.0.1:6379> sadd key2 e

(integer) 1

127.0.0.1:6379> sadd key2 f

(integer) 1

127.0.0.1:6379> sadd key2 g

(integer) 1

127.0.0.1:6379> smembers key1

1) "d"

2) "b"

3) "a"

4) "c"

127.0.0.1:6379> smembers key2

1) "d"

2) "g"

3) "f"

4) "e"

127.0.0.1:6379> sdiff key1 key2    # difference

1) "b"

2) "a"

3) "c"

127.0.0.1:6379> sdiff key2 key1    # difference

1) "g"

2) "f"

3) "e"

127.0.0.1:6379> sinter key1 key2    # intersection

1) "d"

127.0.0.1:6379> sunion key1 key2    # union

1) "d"

2) "g"

3) "e"

4) "b"

5) "a"

6) "c"

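7) "f"

2.4.3 Use cases

On Weibo, user A puts everyone they follow into one set and their followers into another set.

Mutual follows, shared interests, second-degree friends, friend recommendations (six degrees of separation).

Common set operations

SADD key member [member ...] //add members to set key, ignoring members that already exist; creates key if it does not exist

SREM key member [member ...] //remove members from set key

SMEMBERS key //get all members of set key

SCARD key //get the number of members of set key

SISMEMBER key member //check whether member belongs to set key

SRANDMEMBER key [count] //pick count members of set key without removing them

SPOP key [count] //pick count members of set key and remove them

Set algebra operations

SINTER key [key ...] //intersection

SINTERSTORE destination key [key ...] //store the intersection in the new set destination

SUNION key [key ...] //union

SUNIONSTORE destination key [key ...] //store the union in the new set destination

SDIFF key [key ...] //difference

SDIFFSTORE destination key [key ...] //store the difference in the new set destination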

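WeChat lottery mini-program

1) Clicking "join" adds the user to the set

SADD key {userID}

2) View all participants

SMEMBERS key

3) Draw count winners (SRANDMEMBER leaves the winners in the set; SPOP removes them, so they cannot be drawn again)

SRANDMEMBER key [count] / SPOP key [count]

Likes, favorites, and tags on WeChat/Weibo

1) Like

SADD like:{messageID} {userID}

2) Unlike

SREM like:{messageID} {userID}

3) Check whether a user has liked

SISMEMBER like:{messageID} {userID}

4) Get the users who liked

SMEMBERS like:{messageID}

5) Get the number of likes

SCARD like:{messageID}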

SINTER set1 set2 set3 -> { c }

SUNION set1 set2 set3 -> { a,b,c,d,e }

SDIFF set1 set2 set3 -> { a }


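Implementing the Weibo/WeChat follow model with set operations

1) People A follows:

ASet-> {B, C}

2) People D follows:

DSet--> {A, E, B, C}

3) People B follows:

BSet-> {A, D, E, C, F}

4) Followed by both me (A) and D:

SINTER ASet DSet--> {B, C}

5) Do the people I follow also follow D?

SISMEMBER BSet D

SISMEMBER CSet D

6) People I may know:

SDIFF DSet ASet-> {A, E}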
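The same set algebra can be reproduced with java.util.Set to check the results above: retainAll behaves like SINTER, removeAll like SDIFF, and contains like SISMEMBER. FollowModel is a hypothetical helper; the data are the ASet, DSet, and BSet from the example.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical helpers mirroring SINTER, SDIFF and SISMEMBER with java.util.Set.
final class FollowModel {

    static Set<String> sinter(Set<String> a, Set<String> b) {
        Set<String> result = new HashSet<>(a);
        result.retainAll(b);          // keep only members present in both sets
        return result;
    }

    static Set<String> sdiff(Set<String> a, Set<String> b) {
        Set<String> result = new HashSet<>(a);
        result.removeAll(b);          // members of a that are not in b
        return result;
    }

    static boolean sismember(Set<String> set, String member) {
        return set.contains(member);
    }
}
```

As in the Redis version, SDIFF DSet ASet also returns A itself; a real recommendation would additionally remove the current user.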

Implementing e-commerce product filtering with set operations

SADD brand:huawei P40

SADD brand:xiaomi mi-10

SADD brand:iPhone iphone12

SADD os:android P40 mi-10

SADD cpu:brand:intel P40 mi-10

SADD ram:8G P40 mi-10 iphone12

SINTER os:android cpu:brand:intel ram:8G -> {P40,mi-10}

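2.5 Hash

- A Redis hash is a map between string fields and string values; hashes are especially suitable for storing objects.

- Each Redis hash can store 2^32 - 1 field-value pairs (more than 4 billion).

- Conceptually it is close to a string, except that the value is itself a map of fields.

2.5.1 API

2.5.2 API examples

===================================================

hset       # set a field of the hash

hget       # get a field's value

hmset      # set several fields

hmget      # get several fields' values

hgetall    # get all fields and values of the key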

127.0.0.1:6379> hset myhash field1 zhz

(integer) 0

127.0.0.1:6379> hset myhash field1 zhz

(integer) 0

127.0.0.1:6379> flushall

OK

127.0.0.1:6379> hset myhash field1 zhz

(integer) 1

127.0.0.1:6379> hget myhash field1

"zhz"

127.0.0.1:6379> hmset myhash field1 hello field2 world

OK

127.0.0.1:6379> hmget myhash field1 field2

1) "hello"

2) "world"

127.0.0.1:6379> hgetall myhash

1) "field1"

2) "hello"

3) "field2"

4) "world"

===================================================

hdel    # delete the given field from the hash

127.0.0.1:6379> hdel myhash field1

(integer) 1

127.0.0.1:6379> hgetall myhash

1) "field2"

2) "world"

===================================================

hlen    # number of fields in the hash

127.0.0.1:6379> hmset myhash field1 hello field2 world

OK

127.0.0.1:6379> hgetall myhash

1) "field1"

2) "hello"

3) "field2"

4) "world"

127.0.0.1:6379> hlen myhash

(integer) 2

===================================================

hexists    # check whether a field exists in the hash: 1 if it exists, 0 if not

127.0.0.1:6379> hexists myhash field1

(integer) 1

127.0.0.1:6379> hexists myhash field3

(integer) 0

===================================================

hkeys    # get only the fields

hvals    # get only the values

127.0.0.1:6379> hkeys myhash    # only the fields

1) "field1"

2) "field2"

127.0.0.1:6379> hvals myhash    # only the values

1) "hello"

2) "world"

===================================================

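hincrby    # increment a field by a given amount

# There is no hdecrby command: to decrement, pass a negative increment to hincrby

hsetnx    # set the field only if it does not exist yet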

127.0.0.1:6379> hset myhash zhz 1

(integer) 1

127.0.0.1:6379> hincrby myhash zhz 1

(integer) 2

127.0.0.1:6379> hincrby myhash zhz -9    # a negative increment decrements

(integer) -7

127.0.0.1:6379> hincrby myhash zhz 10

(integer) 3

127.0.0.1:6379> hincrby myhash zhz 19

(integer) 22

127.0.0.1:6379> hsetnx myhash field4 hello

(integer) 12.5.3、使用场景

Hash常用操作

HSET key field value //存储一个哈希表key的键值

HSETNX key field value //存储一个不存在的哈希表key的键值

HMSET key field value [field value ...] //在一个哈希表key中存储多个键值对

HGET key field //获取哈希表key对应的field键值

HMGET key field [field ...] //批量获取哈希表key中多个field键值

HDEL key field [field ...] //删除哈希表key中的field键值

HLEN key //返回哈希表key中field的数量

HGETALL key //返回哈希表key中所有的键值

HINCRBY key field increment //为哈希表key中field键的值加上增量increment

对象缓存

HMSET user {userId}:name zhz {userId}:age 21

HMSET user 1:name zzz 1:age 21 2:name hyf 2:age 23

HMGET user 1:name 1:age

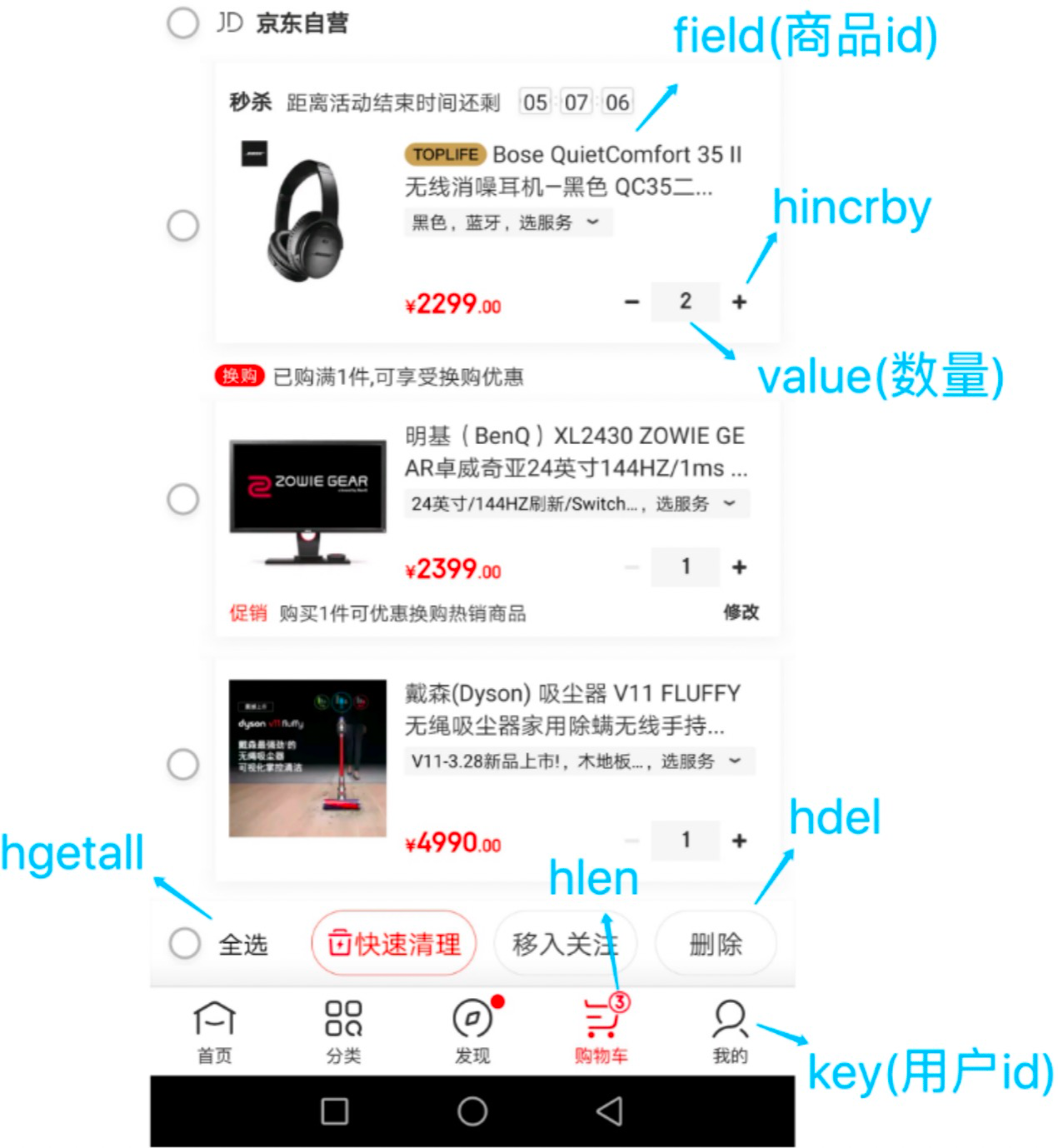

电商购物车

1)以用户id为key

2)商品id为field

3)商品数量为value

购物车操作

1) 添加商品 -> hset cart:1001 10088 1

2) 增加数量 -> hincrby cart:1001 10088 1

3) 商品总数 -> hlen cart:1001

4) 删除商品 -> hdel cart:1001 10088

5) 获取购物车所有商品 -> hgetall cart:1001
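The cart operations above can be mimicked with nested maps: the outer key is cart:{userId}, the inner map goes from product ID to quantity. CartStore is a hypothetical in-memory stand-in, not a Redis client; its hincrby treats a missing field as 0, as HINCRBY does.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory model of cart:{userId} hashes: field = product ID, value = quantity.
final class CartStore {
    private final Map<String, Map<String, Long>> hashes = new HashMap<>();

    private Map<String, Long> cart(String key) {
        return hashes.computeIfAbsent(key, k -> new LinkedHashMap<>());
    }

    void hset(String key, String field, long value) { cart(key).put(field, value); }

    // HINCRBY: a missing field counts as 0; returns the new value.
    long hincrby(String key, String field, long delta) {
        return cart(key).merge(field, delta, Long::sum);
    }

    // HLEN: number of distinct products in the cart.
    long hlen(String key) {
        Map<String, Long> h = hashes.get(key);
        return h == null ? 0 : h.size();
    }

    void hdel(String key, String field) {
        Map<String, Long> h = hashes.get(key);
        if (h == null) return;
        h.remove(field);
        if (h.isEmpty()) hashes.remove(key);   // like Redis, an empty hash no longer exists
    }

    // HGETALL: a copy of every field and value.
    Map<String, Long> hgetall(String key) {
        return new LinkedHashMap<>(hashes.getOrDefault(key, Map.of()));
    }
}
```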

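2.5.4 Pros and cons

Pros

1) Related data is grouped and stored together, which makes it easier to manage

2) Compared with strings, operations consume less memory and CPU

3) Compared with strings, storage takes less space

Cons

1) Expiration can only be set on the whole key, not on individual fields

2) Not well suited to large-scale use in a Redis Cluster, because one big hash lives on a single node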

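2.6 Zset (sorted set)

- Like a set, a Redis sorted set is a collection of string members, and duplicate members are not allowed.

- The difference is that each member is associated with a score of type double, and Redis sorts the members by score from smallest to largest.

- Members are unique, but scores may repeat.

- A sorted set combines a hash table (member to score) with a skip list ordered by score (small sorted sets use a compact encoding instead), so adding and removing members is O(log N) and looking up a member's score is O(1). A sorted set can hold at most 2^32 - 1 members (4,294,967,295, more than 4 billion).

- Compared with a set, each member carries one extra value, its score: sadd k1 v1 -> zadd k1 score1 v1

2.6.1 API

2.6.2 API examples

==============================================================

zadd    add members

zrange    view members by index range, ordered by ascending score

zrangebyscore    view members by score range in ascending order (optionally with their scores; see the examples below)

zrevrange    # members ordered from highest to lowest score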

127.0.0.1:6379> zadd grade 10 zhz1    # add a member

(integer) 1

127.0.0.1:6379> zadd grade 30 zhz2    # add a member

(integer) 1

127.0.0.1:6379> zadd grade 50 zhz3    # add a member

(integer) 1

127.0.0.1:6379> zadd grade 70 zhz4    # add a member

(integer) 1

127.0.0.1:6379> zadd grade 90 zhz5    # add a member

(integer) 1

127.0.0.1:6379> zadd grade 20 hyf 40 hxm    # add several members

(integer) 2

127.0.0.1:6379> zrange grade 0 -1    # members within an index range

1) "zhz1"

2) "hyf"

3) "zhz2"

4) "hxm"

5) "zhz3"

6) "zhz4"

7) "zhz5"

127.0.0.1:6379> zrangebyscore grade -inf +inf    # all members, lowest score first

1) "zhz1"

2) "hyf"

3) "zhz2"

4) "hxm"

5) "zhz3"

6) "zhz4"

7) "zhz5"

127.0.0.1:6379> zrevrange grade 0 -1    # highest score first

1) "zhz5"

2) "zhz4"

3) "zhz3"

4) "hxm"

5) "zhz2"

6) "hyf"

7) "zhz1"

127.0.0.1:6379> zrangebyscore grade -inf +inf withscores    # all members with their scores, lowest score first

1) "zhz1"

2) "10"

3) "hyf"

4) "20"

5) "zhz2"

6) "30"

7) "hxm"

8) "40"

9) "zhz3"

10) "50"

11) "zhz4"

12) "70"

13) "zhz5"

14) "90"

127.0.0.1:6379> zrangebyscore grade -inf 50 withscores    # members with scores in [-inf, 50], with scores, lowest first

1) "zhz1"

2) "10"

3) "hyf"

4) "20"

5) "zhz2"

6) "30"

7) "hxm"

8) "40"

9) "zhz3"

10) "50"

==============================================================

zrem    remove members from a sorted set

127.0.0.1:6379> zrem grade hyf    # remove the given member

(integer) 1

127.0.0.1:6379> zrem grade hxm

(integer) 1

127.0.0.1:6379> zrange grade 0 -1

1) "zhz1"

2) "zhz2"

3) "zhz3"

4) "zhz4"

5) "zhz5"

127.0.0.1:6379> zcard grade    # number of members in the sorted set

(integer) 5

==============================================================

zcount    # count the members whose score falls in the given range

127.0.0.1:6379> zadd myzset 1 hello

(integer) 1

127.0.0.1:6379> zadd myzset 2 world

(integer) 1

127.0.0.1:6379> zadd myzset 3 zhz 4 hyf

(integer) 2

127.0.0.1:6379> zcount myzset 1 3    # members with score in [1, 3]

(integer) 3

127.0.0.1:6379> zcount myzset 1 5    # members with score in [1, 5]

(integer) 4

127.0.0.1:6379> zcount myzset 1 2    # members with score in [1, 2]

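(integer) 2

2.6.3 Use cases

Applications:

- Sorted storage: class grade sheets, sorted salary tables

- Weighted messages: normal messages get score 1, important ones score 2, and processing follows the weights

- Leaderboards: fetch the top N

Common sorted set operations

ZADD key score member [[score member]…] //add members with scores to sorted set key

ZREM key member [member …] //remove members from sorted set key

ZSCORE key member //return the score of member in sorted set key

ZINCRBY key increment member //add increment to the score of member in sorted set key

ZCARD key //return the number of members in sorted set key

ZRANGE key start stop [WITHSCORES] //get the members of sorted set key from index start to stop, in ascending order

ZREVRANGE key start stop [WITHSCORES] //get the members of sorted set key from index start to stop, in descending order

Sorted set algebra operations

ZUNIONSTORE destkey numkeys key [key ...] //union

ZINTERSTORE destkey numkeys key [key …] //intersection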
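To see what ZINCRBY, ZREVRANGE, and ZUNIONSTORE compute in a leaderboard such as the trending-news list, here is an in-memory sketch with one Map<String, Double> per key. The class Leaderboard is hypothetical. Ties are broken in reverse lexicographic order of the member, which is how ZREVRANGE orders members with equal scores, and the union adds scores up, matching ZUNIONSTORE's default SUM aggregate.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for ZINCRBY / ZREVRANGE / ZUNIONSTORE.
final class Leaderboard {

    // ZINCRBY key increment member: a missing member starts at 0; returns the new score.
    static double zincrby(Map<String, Double> zset, double increment, String member) {
        return zset.merge(member, increment, Double::sum);
    }

    // ZREVRANGE key 0 n-1: highest score first; equal scores in reverse lexicographic order.
    static List<String> top(Map<String, Double> zset, int n) {
        List<String> members = new ArrayList<>(zset.keySet());
        Comparator<String> byScoreThenMember =
                Comparator.<String, Double>comparing(zset::get)
                          .thenComparing(Comparator.<String>naturalOrder());
        members.sort(byScoreThenMember.reversed());
        return new ArrayList<>(members.subList(0, Math.min(n, members.size())));
    }

    // ZUNIONSTORE dest numkeys key...: with the default SUM aggregate, scores are added up.
    @SafeVarargs
    static Map<String, Double> zunion(Map<String, Double>... keys) {
        Map<String, Double> result = new HashMap<>();
        for (Map<String, Double> key : keys) {
            key.forEach((member, score) -> result.merge(member, score, Double::sum));
        }
        return result;
    }
}
```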

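Delay queue

Use a sorted set with score = current timestamp + delay and the message content as the member. Producers call ZADD; consumers poll with ZRANGEBYSCORE for entries whose score is at or before the current time, process them, and then delete each finished task with ZREM key member.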
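A single-process sketch of that delay queue, with a TreeSet ordered by (score, member) standing in for the sorted set. DelayQueueSketch is hypothetical and simplified: unlike a real sorted set, adding the same message twice keeps two entries instead of updating its score.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

// Hypothetical in-memory analogue of a sorted-set delay queue.
// Entries are ordered by score (due time), then by member, like a Redis sorted set.
final class DelayQueueSketch {

    private static final class Item implements Comparable<Item> {
        final long score;      // due time = enqueue time + delay (ms)
        final String member;   // message content
        Item(long score, String member) { this.score = score; this.member = member; }
        @Override public int compareTo(Item o) {
            int c = Long.compare(score, o.score);
            return c != 0 ? c : member.compareTo(o.member);
        }
    }

    private final TreeSet<Item> zset = new TreeSet<>();

    // ZADD queue (now + delayMillis) message
    void produce(String message, long nowMillis, long delayMillis) {
        zset.add(new Item(nowMillis + delayMillis, message));
    }

    // ZRANGEBYSCORE queue -inf now, then ZREM each returned member.
    List<String> pollDue(long nowMillis) {
        List<String> due = new ArrayList<>();
        while (!zset.isEmpty() && zset.first().score <= nowMillis) {
            due.add(zset.pollFirst().member);
        }
        return due;
    }
}
```

With several consumers against real Redis, ZRANGEBYSCORE followed by ZREM is not atomic; a consumer can treat a ZREM reply of 1 as "this task is mine" and skip the task when ZREM returns 0.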

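Implementing a leaderboard with sorted sets (Weibo trending list)

1) A news item is clicked

ZINCRBY hotNews:20190819 1 吴亦凡入狱

2) Show today's top 10

ZREVRANGE hotNews:20190819 0 9 WITHSCORES

3) Compute the 7-day ranking

ZUNIONSTORE hotNews:20190813-20190819 7

hotNews:20190813 hotNews:20190814... hotNews:20190819

4) Show the 7-day top 10

ZREVRANGE hotNews:20190813-20190819 0 9 WITHSCORES

3. Special data types

3.1 Geospatial (GEO)

Online longitude/latitude lookup: http://www.jsons.cn/lngcode/

Definition:

- Redis GEO stores geographic location information and supports operations on it; it was added in Redis 3.2.

Under the hood it is a sorted set, so sorted set commands can also be used on GEO keys.

Use cases:

- Locating friends

- People nearby

- Distance calculation for ride-hailing

- Computing locations, the distance between two places, and everyone within a given radius

API:

- Redis GEO commands: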

- geoadd:

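- Adds the coordinates of a geographic location.

- GEOADD adds the given spatial elements (longitude, latitude, name) to the specified key. They are stored in the key as a sorted set, so that commands such as GEORADIUS and GEORADIUSBYMEMBER can later retrieve them by location.

- GEOADD takes its arguments in the standard x,y order, so you must give the longitude first and then the latitude. The coordinates GEOADD can index are limited: areas very close to the poles cannot be indexed. The exact limits are those of coordinate systems such as EPSG:900913 / EPSG:3785 / OSGEO:41001:

- Valid longitudes are from -180 to 180 degrees.

- Valid latitudes are from -85.05112878 to 85.05112878 degrees.

- The poles themselves therefore cannot be added.

When a longitude or latitude outside these ranges is given, GEOADD returns an error.

Redis has no GEODEL command; use ZREM to delete a member from a GEO key, because the geo index is essentially a sorted set.

# geoadd: add locations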

127.0.0.1:6379> geoadd china:city 116.40 39.90 beijing

(integer) 1

127.0.0.1:6379> geoadd china:city 121.47 31.23 shanghai

(integer) 1

127.0.0.1:6379> geoadd china:city 106.50 29.53 chongqing

(integer) 1

127.0.0.1:6379> geoadd china:city 114.05 22.52 shenzhen

(integer) 1

127.0.0.1:6379> geoadd china:city 120.16 30.24 hangzhou 108.96 34.26 xian

(integer) 2

geopos: get the coordinates of the given members.

127.0.0.1:6379> geopos china:city beijing    # longitude and latitude of the given city

1) 1) "116.39999896287918091"

2) "39.90000009167092543"

127.0.0.1:6379> geopos china:city beijing chongqing

1) 1) "116.39999896287918091"

2) "39.90000009167092543"

2) 1) "106.49999767541885376"

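2) "29.52999957900659211"

geodist: compute the distance between two members.

The distance is in meters by default; the unit can be one of:

- m: meters

- km: kilometers

- mi: miles

- ft: feet

127.0.0.1:6379> geodist china:city beijing shanghai km    # straight-line distance between Beijing and Shanghai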

"1067.3788"

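127.0.0.1:6379> geodist china:city beijing shenzhen km

"1945.7881"

georadius: return the members within a given radius of a longitude/latitude center.

- People nearby: get the locations of everyone near you by querying a radius

- Limit the result to a given number of people

- All the data must be stored in china:city for the results to be accurate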
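A sketch of what GEODIST and GEORADIUS compute, assuming the haversine great-circle formula with the Earth radius constant used in Redis's geohash helper (6372797.560856 m). GeoSketch is hypothetical and works on the exact input coordinates, whereas Redis stores coordinates rounded through a 52-bit geohash, so the last digits can differ slightly.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of GEODIST / GEORADIUS-style queries over an in-memory map.
final class GeoSketch {
    static final double EARTH_RADIUS_METERS = 6372797.560856;

    // Great-circle (haversine) distance in meters between two (longitude, latitude) points in degrees.
    static double distanceMeters(double lon1, double lat1, double lon2, double lat2) {
        double lat1r = Math.toRadians(lat1), lat2r = Math.toRadians(lat2);
        double u = Math.sin((lat2r - lat1r) / 2);
        double v = Math.sin(Math.toRadians(lon2 - lon1) / 2);
        double a = u * u + Math.cos(lat1r) * Math.cos(lat2r) * v * v;
        return 2 * EARTH_RADIUS_METERS * Math.asin(Math.sqrt(a));
    }

    // Members within radiusMeters of (lon, lat), nearest first.
    static List<String> radius(Map<String, double[]> points, double lon, double lat, double radiusMeters) {
        List<String> hits = new ArrayList<>();
        for (Map.Entry<String, double[]> e : points.entrySet()) {
            double[] p = e.getValue();                 // p[0] = longitude, p[1] = latitude
            if (distanceMeters(lon, lat, p[0], p[1]) <= radiusMeters) hits.add(e.getKey());
        }
        hits.sort((x, y) -> Double.compare(
                distanceMeters(lon, lat, points.get(x)[0], points.get(x)[1]),
                distanceMeters(lon, lat, points.get(y)[0], points.get(y)[1])));
        return hits;
    }
}
```

For Beijing to Shanghai this gives roughly 1067 km, in line with the geodist output above, and a 500 km radius around (110, 30) yields chongqing and then xian, matching the georadius queries in this section.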

127.0.0.1:6379> georadius china:city 110 30 1000 km    # cities within 1000 km of longitude 110, latitude 30

1) "chongqing"

2) "xian"

3) "shenzhen"

4) "hangzhou"

127.0.0.1:6379> georadius china:city 110 30 500 km

1) "chongqing"

2) "xian"

127.0.0.1:6379> georadius china:city 110 30 500 km withdist    # also return the distance to the center

1) 1) "chongqing"

2) "341.9374"

2) 1) "xian"

2) "483.8340"

127.0.0.1:6379> georadius china:city 110 30 500 km withcoord    # also return each member's coordinates

1) 1) "chongqing"

2) 1) "106.49999767541885376"

2) "29.52999957900659211"

2) 1) "xian"

2) 1) "108.96000176668167114"

2) "34.25999964418929977"

127.0.0.1:6379> georadius china:city 110 30 500 km withdist withcoord count 1    # return only the given number of results

1) 1) "chongqing"

2) "341.9374"

3) 1) "106.49999767541885376"

2) "29.52999957900659211"

127.0.0.1:6379> georadius china:city 110 30 500 km withdist withcoord count 2

1) 1) "chongqing"

2) "341.9374"

3) 1) "106.49999767541885376"

2) "29.52999957900659211"

2) 1) "xian"

2) "483.8340"

3) 1) "108.96000176668167114"

2) "34.25999964418929977"

georadiusbymember: same as georadius, except that the center is a member already stored in the key.

# find the members around a given member

127.0.0.1:6379> georadiusbymember china:city beijing 1000 km

1) "beijing"

2) "xian"

127.0.0.1:6379> georadiusbymember china:city shanghai 1000 km

1) "hangzhou"

2) "shanghai"

geohash: return the geohash of one or more members.

- The command returns an 11-character geohash string.
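How the string is built: a standard geohash alternates longitude and latitude bits, halving the current range for each bit, and turns every 5 bits into one base32 character. The sketch below assumes the standard ranges [-180, 180] and [-90, 90]; GeohashSketch is hypothetical. Redis keeps only 52 bits per location (the sorted-set score), which covers 10 full characters, so the 11th character of its GEOHASH output appears to be padding (both sample strings in this section end in 0).

```java
// Hypothetical sketch of standard base32 geohash encoding.
// Assumption: longitude range [-180, 180], latitude range [-90, 90], longitude bit first.
final class GeohashSketch {
    private static final String BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz";

    static String encode(double lon, double lat, int chars) {
        double lonMin = -180, lonMax = 180, latMin = -90, latMax = 90;
        StringBuilder sb = new StringBuilder();
        boolean lonBit = true;   // bits alternate: longitude, latitude, longitude, ...
        int bit = 0, idx = 0;
        while (sb.length() < chars) {
            if (lonBit) {
                double mid = (lonMin + lonMax) / 2;
                if (lon >= mid) { idx = (idx << 1) | 1; lonMin = mid; } else { idx <<= 1; lonMax = mid; }
            } else {
                double mid = (latMin + latMax) / 2;
                if (lat >= mid) { idx = (idx << 1) | 1; latMin = mid; } else { idx <<= 1; latMax = mid; }
            }
            lonBit = !lonBit;
            if (++bit == 5) {        // every 5 bits become one base32 character
                sb.append(BASE32.charAt(idx));
                bit = 0;
                idx = 0;
            }
        }
        return sb.toString();
    }
}
```

Because each extra character narrows the cell, two locations whose geohashes share a long prefix lie in the same small cell and are usually close, although neighbors on opposite sides of a cell boundary can share only a short prefix.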

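# converts a two-dimensional longitude/latitude pair into a one-dimensional string; the longer the shared prefix of two strings, the closer the two locations generally are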

127.0.0.1:6379> geohash china:city beijing chongqing

1) "wx4fbxxfke0"

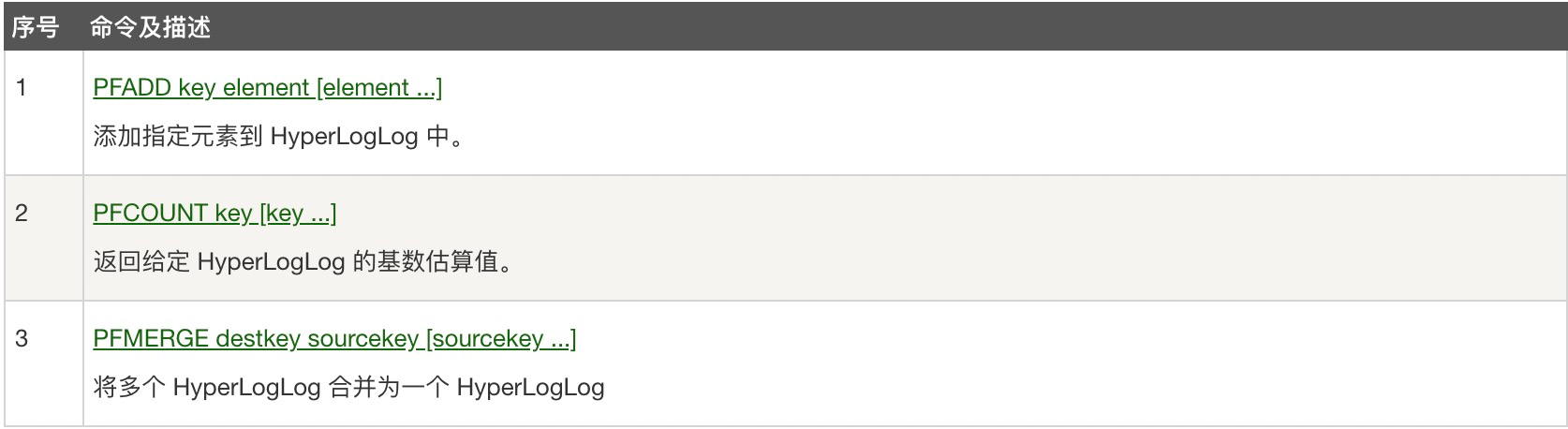

2) "wm5xzrybty0"3.2、HyperLogLog

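- Redis HyperLogLog is an algorithm for cardinality estimation. Its advantage is that, however many or however large the input elements are, the space needed to compute the cardinality stays fixed and small.

- In Redis, each HyperLogLog key needs only 12 KB of memory to count close to 2^64 distinct elements. This contrasts sharply with a set, whose memory grows with the number of elements.

- However, HyperLogLog only computes the cardinality from its inputs and does not store the input elements themselves. So, unlike a set, it cannot return the individual elements.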

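What is a cardinality?

A(1,3,5,6,7,8,7)

B(1,3,5,7,8)

The cardinality is the number of distinct elements: 6 for A (1,3,5,6,7,8) and 5 for B. HyperLogLog estimates it with a small, acceptable error.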

Definition:

- Redis added the HyperLogLog structure in version 2.8.9.

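Use cases:

- Page UV (unique visitors): one person who visits a site many times still counts as one visitor.

- The traditional approach stores user ids in a set and uses the set's size as the count. This stores a large number of user ids, which is costly, even though the goal is only to count them, not to keep them. HyperLogLog's 0.81% standard error is negligible for a UV statistic.

- If some error is tolerable, HyperLogLog is the right choice.

- If no error is allowed, use a set or your own data structure.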
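The 12 KB and 0.81% figures above follow from how Redis sizes a dense HyperLogLog. As far as I know, it uses m = 2^14 = 16384 registers of 6 bits each, and the standard error of HyperLogLog is approximately 1.04/√m:

```latex
\begin{aligned}
\text{memory} &= m \times 6\ \text{bits} = 2^{14} \times 6 = 98304\ \text{bits} = 12288\ \text{bytes} = 12\ \text{KB} \\
\text{standard error} &\approx \frac{1.04}{\sqrt{m}} = \frac{1.04}{128} \approx 0.81\%
\end{aligned}
```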

Usage:

127.0.0.1:6379> pfadd zhz 1a b c d e f g h i j # create the first group of elements, zhz

(integer) 1

127.0.0.1:6379> pfcount zhz # cardinality of zhz

(integer) 10

127.0.0.1:6379> pfadd zhz1 i j z x v b n m c # create the second group of elements, zhz1

(integer) 1

127.0.0.1:6379> pfcount zhz1 # cardinality of zhz1

(integer) 9

127.0.0.1:6379> pfmerge zhz2 zhz zhz1 # merge zhz and zhz1 into zhz2 (union)

OK

127.0.0.1:6379> pfcount zhz2 # cardinality of zhz2, i.e. of the union

(integer) 15

3.3、Bitmap (bit storage) => Bloom filter => Cuckoo filter

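Use cases:

- Tracking user status (active/inactive, logged in/not logged in, daily check-ins over 365 days)

- Anything with exactly two states can use a bitmap

Definition: a bitmap is a data structure that records information in individual binary bits, each of which has only two states, 0 and 1.

365 days = 365 bits; at 8 bits per byte, that is about 46 bytes.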
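The setbit/getbit/bitcount semantics can be tried locally with `java.util.BitSet`, using one bit per day and the same seven-day pattern as the transcript below. This is only an in-memory analogue. Redis stores the bits inside a string value and numbers them from the most significant bit of each byte, which BitSet does not reproduce. `ClockInBitmapSketch` is a hypothetical name.

```java
import java.util.BitSet;

// Local analogue of SETBIT/GETBIT/BITCOUNT for a weekly check-in record (illustrative only).
final class ClockInBitmapSketch {
    static BitSet week() {
        BitSet login = new BitSet(7);
        int[] checkedIn = {1, 1, 1, 0, 1, 0, 1}; // offsets 0..6 = Sunday..Saturday
        for (int day = 0; day < checkedIn.length; day++) {
            login.set(day, checkedIn[day] == 1); // like: setbit login <day> <0|1>
        }
        return login;
    }

    /** Bytes needed to store one bit per day, rounded up to whole bytes. */
    static int bytesFor(int days) {
        return (days + 7) / 8;
    }

    public static void main(String[] args) {
        BitSet login = week();
        System.out.println(login.get(1));        // like: getbit login 1
        System.out.println(login.get(5));        // like: getbit login 5
        System.out.println(login.cardinality()); // like: bitcount login
        System.out.println(bytesFor(365));       // one year of daily flags
    }
}
```

`main` prints true, false, 5 and 46. These match the getbit/bitcount results in the transcript and the 46-byte estimate.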

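Usage:

setbit: set the state of a bit

getbit: read the state of a bit

bitcount: count the bits whose state is 1

Below, Sunday, Monday, Tuesday, Thursday and Saturday are check-in days (1); Wednesday and Friday are not (0):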

127.0.0.1:6379> setbit login 0 1

(integer) 0

127.0.0.1:6379> setbit login 1 1

(integer) 0

127.0.0.1:6379> setbit login 2 1

(integer) 0

127.0.0.1:6379> setbit login 3 0

(integer) 0

127.0.0.1:6379> setbit login 4 1

(integer) 0

127.0.0.1:6379> setbit login 5 0

(integer) 0

127.0.0.1:6379> setbit login 6 1

(integer) 0

127.0.0.1:6379> getbit login 1

(integer) 1

127.0.0.1:6379> getbit login 5

(integer) 0

127.0.0.1:6379> bitcount login 统计全勤

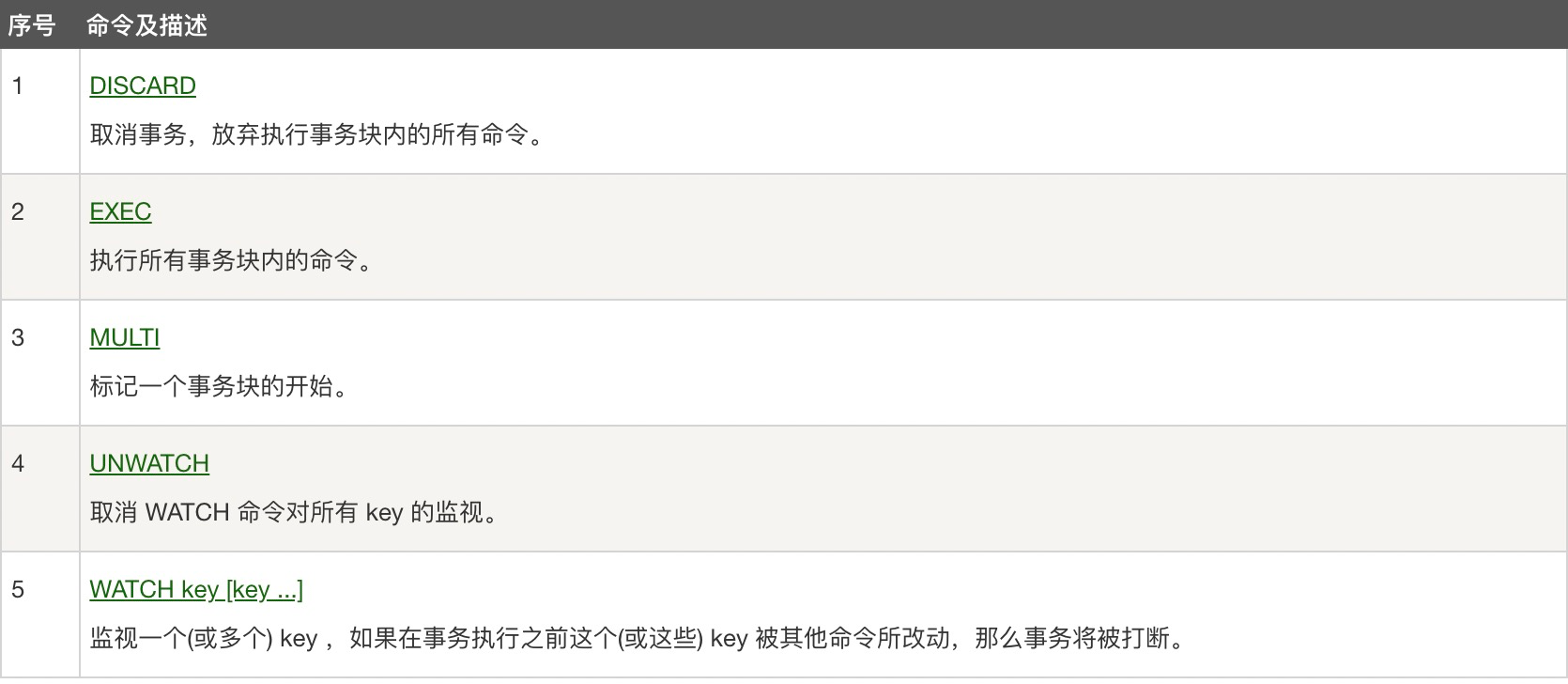

(integer) 54、事务

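- A single Redis command is atomic, but a transaction does not guarantee atomicity: there is no rollback when one of its commands fails.

- In essence, a Redis transaction is a group of commands. All commands in a transaction are serialized and executed in order when the transaction runs.

- One-shot, ordered, exclusive: a series of commands is executed together, without commands from other clients interleaved.

- Redis transactions have no notion of isolation levels.

- Commands inside a transaction are not executed right away; they only run when EXEC is issued.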

Redis transactions:

- Start the transaction (multi)

- Queue commands (...)

- Execute the transaction (exec)

Tests:

Executing a transaction normally:

127.0.0.1:6379> multi # start the transaction

OK

127.0.0.1:6379(TX)> set k1 v1 # queue a command

QUEUED

127.0.0.1:6379(TX)> set k2 v2

QUEUED

127.0.0.1:6379(TX)> set k3 v3

QUEUED

127.0.0.1:6379(TX)> get k1

QUEUED

127.0.0.1:6379(TX)> get k3

QUEUED

127.0.0.1:6379(TX)> exec # execute the transaction

1) OK

2) OK

3) OK

4) "v1"

5) "v3"

Discarding a transaction:

127.0.0.1:6379> multi # start the transaction

OK

127.0.0.1:6379(TX)> set k1 v1

QUEUED

127.0.0.1:6379(TX)> set k2 v2

QUEUED

127.0.0.1:6379(TX)> set k4 v4

QUEUED

127.0.0.1:6379(TX)> discard # discard the transaction

OK

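127.0.0.1:6379> get k4 # none of the queued commands were executed

(nil)

Queue-time ("compile-time") error: if a command itself is wrong (for example, an unknown command), none of the commands in the transaction are executed: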

127.0.0.1:6379> multi

OK

127.0.0.1:6379(TX)> set z1 1

QUEUED

127.0.0.1:6379(TX)> set z2 2

QUEUED

127.0.0.1:6379(TX)> setget z3 2 # invalid command

(error) ERR unknown command `setget`, with args beginning with: `z3`, `2`,

127.0.0.1:6379(TX)> set z4 4

QUEUED

127.0.0.1:6379(TX)> exec # executing the transaction fails

(error) EXECABORT Transaction discarded because of previous errors.

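127.0.0.1:6379> get z4 # none of the commands were executed

(nil)

Runtime error (like 1/0): if a queued command only fails when it is executed, the other commands in the transaction still run normally, and only the failing command returns an error: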

127.0.0.1:6379> set zhz "hello"

OK

127.0.0.1:6379> multi

OK

127.0.0.1:6379(TX)> incr zhz # will fail at execution time

QUEUED

127.0.0.1:6379(TX)> set zhz1 zhz

QUEUED

127.0.0.1:6379(TX)> set zhz2 zhz1

QUEUED

127.0.0.1:6379(TX)> exec

1) (error) ERR value is not an integer or out of range 虽然第一条失败了,但是后面的都成功了

2) OK

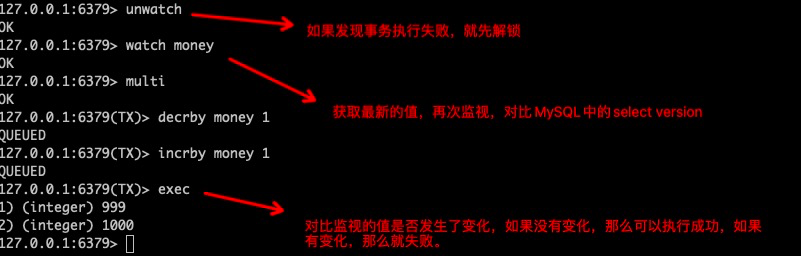

3) OK监控!Watch

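- Optimistic locking

- Assumes things will usually not go wrong, so it does not lock. When updating, it checks whether anyone has modified the data in the meantime:

- Step 1: read the version

- Step 2: when updating, compare the version

- Pessimistic locking

- Assumes things can always go wrong, so it locks for every operation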
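The two optimistic-locking steps (read a version, compare it on update) can be sketched in plain Java as follows. `OptimisticLockSketch` and `VersionedValue` are hypothetical names. In Redis, WATCH plays the role of the version check, and EXEC returning nil corresponds to `compareAndSet` returning false, after which the client re-reads and retries.

```java
// Hypothetical sketch of optimistic locking: read a version, update only if it is unchanged.
final class OptimisticLockSketch {
    static final class VersionedValue {
        private long value;
        private long version;

        VersionedValue(long value) { this.value = value; }

        synchronized long[] read() {            // step 1: get the value and its version
            return new long[] {value, version};
        }

        synchronized boolean compareAndSet(long expectedVersion, long newValue) {
            if (version != expectedVersion) {   // step 2: someone changed it in the meantime
                return false;
            }
            value = newValue;
            version++;
            return true;
        }
    }

    /** Withdraws an amount, retrying with the latest value whenever the version check fails. */
    static int withdraw(VersionedValue money, long amount, Runnable interference) {
        int attempts = 0;
        while (true) {
            attempts++;
            long[] snapshot = money.read();
            if (attempts == 1) {
                interference.run();             // simulate another client writing between read and update
            }
            if (money.compareAndSet(snapshot[1], snapshot[0] - amount)) {
                return attempts;
            }
        }
    }

    public static void main(String[] args) {
        VersionedValue money = new VersionedValue(100);
        // Another client sets money to 1000 after our first read, like "set money 1000" from a second redis-cli
        int attempts = withdraw(money, 20, () -> money.compareAndSet(money.read()[1], 1000));
        System.out.println(attempts + " attempts, money = " + money.read()[0]);
    }
}
```

The first attempt fails because the version changed. The retry then works on the fresh value, so `main` prints "2 attempts, money = 980".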

Redis WATCH test

Successful execution:

127.0.0.1:6379> set money 100

OK

127.0.0.1:6379> set out 0

OK

127.0.0.1:6379> watch money # watch the money key

OK

127.0.0.1:6379> multi # money does not change during the transaction, so it executes successfully

OK

127.0.0.1:6379(TX)> decrby money 20

QUEUED

127.0.0.1:6379(TX)> incrby out 20

QUEUED

127.0.0.1:6379(TX)> exec

1) (integer) 80

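2) (integer) 20

Testing concurrent modification: WATCH can be used as Redis's optimistic lock. If another client changes a watched key before EXEC, the transaction is aborted:

127.0.0.1:6379> set money 100

OK

127.0.0.1:6379> set out 0

OK

127.0.0.1:6379> watch money # watch the money key

OK

127.0.0.1:6379> multi

OK

127.0.0.1:6379(TX)> decrby money 20

QUEUED

127.0.0.1:6379(TX)> incrby out 20

QUEUED

# meanwhile, a second client runs: set money 1000

127.0.0.1:6379(TX)> exec # money changed after WATCH, so nothing is executed

(nil)

If the transaction fails, fetch the latest value and retry. EXEC unwatches all keys whether or not it succeeded, so call watch again before the next multi.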

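5、Pipelining

5.1、What it is

- The client sends several requests at once without waiting for each response, then reads all the responses in one go after every command has been sent. This greatly reduces the network overhead of running many commands: a pipeline costs roughly the network overhead of a single command. Even so, packing more commands into one pipeline is not always better (see the drawbacks below). The server executes each command in a pipeline as soon as it arrives; if one fails, the error shows up in that command's own reply. A pipeline therefore does not mean "all commands succeed together": a failure in an earlier command does not affect later ones, which still execute.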

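5.2、Drawbacks

- With too many commands, the client thread stays blocked until the whole batch has been answered.

- With a pipeline, Redis must buffer the replies to all the commands in the batch until they are read. The more commands in the batch, the more memory this buffer uses.

- A pipeline is not atomic, whereas Redis's multi-key commands (such as mset) are.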
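The server buffers every reply until the batch is read. A common mitigation (my suggestion, not part of the original notes) is to split a large workload into fixed-size batches and send one pipeline per batch. The hypothetical helper below shows only the batching. In the Jedis example further down, each batch would get its own `jedis.pipelined()` followed by `syncAndReturnAll()`. The batch size of 3 is arbitrary.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: split a large list of commands into fixed-size pipeline batches.
final class PipelineBatchSketch {
    static <T> List<List<T>> batches(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> result = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            result.add(new ArrayList<>(items.subList(start, end))); // copy so batches are independent
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            keys.add("name" + i);
        }
        for (List<String> batch : batches(keys, 3)) {
            // With Jedis, each batch would be sent as: Pipeline p = jedis.pipelined(); ... p.syncAndReturnAll();
            System.out.println("pipeline batch: " + batch);
        }
    }
}
```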

5.3、Usage

5.3.1、Jedis

package com.zhz.testredis.pipeline;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisPoolConfig;

import redis.clients.jedis.Pipeline;

import java.util.List;

/**

* @author zhouhengzhe

* @Description: pipeline: one network round trip instead of many

* @date 2021/8/8 12:18 AM

*/

public class JedisPipeline {

public static void main(String[] args) {

JedisPoolConfig jedisPoolConfig=new JedisPoolConfig();

jedisPoolConfig.setMaxTotal(20);

jedisPoolConfig.setMaxIdle(10);

jedisPoolConfig.setMinIdle(5);

// timeout: here it is both the connection timeout and the read/write timeout; since Jedis 2.8 there are constructors that take connectionTimeout and soTimeout separately

JedisPool jedisPool=new JedisPool(jedisPoolConfig, "localhost", 6379, 3000, "123456");

Jedis jedis = null;

try {

//Borrow a connection from the Redis connection pool to run commands

jedis=jedisPool.getResource();

/**

* Bulk loading with redis-cli pipe mode: cat redis.txt | redis-cli -h 127.0.0.1 -a password -p 6379 --pipe

*/

//Output with the setbit line below uncommented: [1, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 2, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 3, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 4, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 5, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 6, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 7, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 8, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 9, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range, 10, OK, redis.clients.jedis.exceptions.JedisDataException: ERR bit offset is not an integer or out of range]

Pipeline pl = jedis.pipelined();

for (int i = 0; i < 10; i++) {

pl.incr("plKey");

pl.set("name"+i,"zhz");

//Simulate an error inside the pipeline (a negative bit offset is invalid)

// pl.setbit("name",-1,true);

}

List<Object> results = pl.syncAndReturnAll();

System.out.println(results);

}catch (Exception e){

e.printStackTrace();

}finally {

//Note: this does not close the connection; with JedisPool, close() returns the Jedis instance to the pool.

if (jedis!=null){

jedis.close();

}

}

}

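}

6、Lua scripts

6.1、Learning Lua

https://www.runoob.com/lua/lua-tutorial.html

6.2、Why use them

- Less network overhead

- Atomic operations

- A replacement for Redis transactions: Redis's built-in transactions are quite limited, while Lua scripts cover almost everything ordinary transactions are used for. The official recommendation is to use Lua scripts where you would otherwise use Redis transactions.

6.3、Caveats

Avoid infinite loops and expensive computations in Lua scripts. Redis executes scripts in a single thread, so while a script runs, Redis is blocked and accepts no other commands. A pipeline, by contrast, does not block Redis.

6.4、Usage

6.4.1、Jedis integration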

package com.zhz.testredis.lua;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisPoolConfig;

import java.util.Collections;

import java.util.List;

/**

* @author zhouhengzhe

* @Description: Lua script

* @date 2021/8/8 12:28 AM

*/

public class JedisLuaSingle {

public static void main(String[] args) {

JedisPoolConfig jedisPoolConfig=new JedisPoolConfig();

jedisPoolConfig.setMaxTotal(20);

jedisPoolConfig.setMaxIdle(10);

jedisPoolConfig.setMinIdle(5);

// timeout: here it is both the connection timeout and the read/write timeout; since Jedis 2.8 there are constructors that take connectionTimeout and soTimeout separately

JedisPool jedisPool=new JedisPool(jedisPoolConfig, "localhost", 6379, 3000, "123456");

Jedis jedis = null;

try {

//Borrow a connection from the Redis connection pool to run commands

jedis=jedisPool.getResource();

/**

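* Lua script example: an atomic stock decrement for a product. The script runs as a single command, so concurrent callers wait their turn (a building block for distributed locking)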

*/

//Initialize the stock of product 1

jedis.set("product_stock_1","15");

String script=" local count = redis.call('get', KEYS[1]) " +//get product_stock_1

" local a = tonumber(count) " + //convert to a number

" local b = tonumber(ARGV[1]) " +

" if a >= b then " +

" redis.call('set', KEYS[1], a-b) " +

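//Uncomment to simulate a script error (Redis forbids creating global variables); note that Redis does NOT roll back the SET already executed above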

//" bb = 0 " +

" return 1 " +

" end " +

" return 0 ";

Object obj = jedis.eval(script, Collections.singletonList("product_stock_1"), Collections.singletonList("10"));

System.out.println(obj);

}catch (Exception e){

e.printStackTrace();

}finally {

//Note: this does not close the connection; with JedisPool, close() returns the Jedis instance to the pool.

if (jedis!=null){

jedis.close();

}

}

}

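}

7、Bloom filters

7.1、What is a Bloom filter

- The Bloom filter was proposed by Burton Bloom in 1970. It is essentially a long binary vector (a bitmap) plus a set of random mapping functions (hash functions), and it tests whether an element belongs to a set. Its advantages are space efficiency and query time far better than general-purpose approaches; its drawbacks are a certain false-positive rate and difficulty deleting elements.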

7.2、How it works

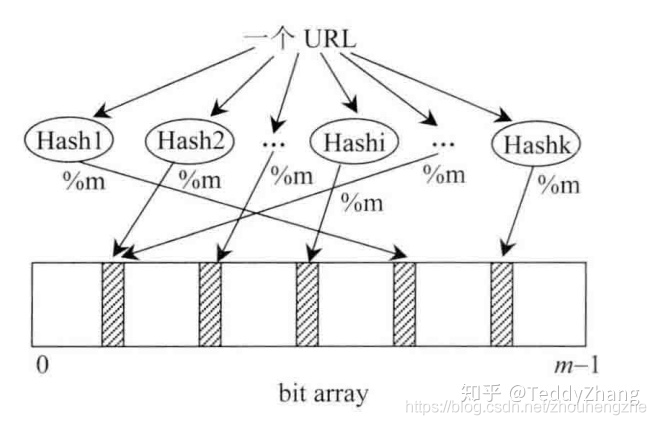

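- Each key is hashed several times.

- Bloom filters are widely used in web-page blacklists, spam filters, crawler URL deduplication and similar systems. One might ask: why not just store the URLs in a database and look them up, or build a hash table?

- That works when the data is small. But if a blacklist contains 10 billion URLs, a database lookup is slow. At 64 B per URL, the data would also need 640 GB of memory, which an ordinary server can hardly provide.

- When memory is short and lookups are slow, consider a Bloom filter, provided the business can tolerate some misjudgment.

7.3、Bitmap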

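- The core of a Bloom filter is a bitmap: a bit array in which every position occupies exactly 1 bit, with only two states, 0 and 1. This is the bitarray in the figure above; its size is the size of the Bloom filter.

- Suppose there are k hash functions whose output ranges are larger than m. Taking each output modulo m (%m) gives k values in [0, m-1]. Since the hash functions are independent, these k values are independent too. Finally, set the corresponding k positions in the bitarray to 1 (mark them black).

- To check an object, compute its k hash values the same way, then check whether all k positions in the bitarray are 1 (black). If any of them is not, the object is definitely not in the set, so it is not on the blacklist. If all are black, the object is probably in the set, but this can be wrong. When too many objects go into a bitarray that is too small, most bits become black and false positives become likely. Using a Bloom filter therefore means tolerating an error rate, however small.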
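The add/check procedure above can be sketched in memory with `java.util.BitSet`. `InMemoryBloomFilterSketch` is a hypothetical class. It uses double hashing (h1 + i*h2) like the BloomFilterHelper further below, but swaps in `String.hashCode` and CRC32 as the two base hashes instead of Murmur3; this is an assumption made to stay within the JDK. It shows the key property: an added element is always reported present, while an absent one is usually, but not always, reported absent.

```java
import java.nio.charset.StandardCharsets;
import java.util.BitSet;
import java.util.zip.CRC32;

// Hypothetical in-memory Bloom filter: m bits, k positions per value via double hashing.
final class InMemoryBloomFilterSketch {
    private final BitSet bits;
    private final int m; // number of bits
    private final int k; // number of hash functions

    InMemoryBloomFilterSketch(int m, int k) {
        this.bits = new BitSet(m);
        this.m = m;
        this.k = k;
    }

    // Two base hashes; the i-th hash function is h1 + i * h2, mapped into [0, m-1]
    private int[] positions(String value) {
        byte[] data = value.getBytes(StandardCharsets.UTF_8);
        CRC32 crc = new CRC32();
        crc.update(data, 0, data.length);
        int h1 = value.hashCode();
        int h2 = (int) crc.getValue();
        int[] result = new int[k];
        for (int i = 0; i < k; i++) {
            result[i] = Math.floorMod(h1 + i * h2, m);
        }
        return result;
    }

    void add(String value) {
        for (int p : positions(value)) {
            bits.set(p); // mark the k positions black
        }
    }

    boolean mightContain(String value) {
        for (int p : positions(value)) {
            if (!bits.get(p)) {
                return false; // one unset bit: definitely absent
            }
        }
        return true; // all k bits set: probably present
    }

    public static void main(String[] args) {
        InMemoryBloomFilterSketch filter = new InMemoryBloomFilterSketch(1 << 16, 5);
        filter.add("http://example.com/bad");
        System.out.println(filter.mightContain("http://example.com/bad")); // always true once added
        System.out.println(filter.mightContain("http://example.com/ok"));  // usually false
    }
}
```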

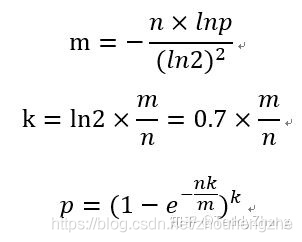

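7.4、Computing the key Bloom filter parameters

Let n be the number of input objects, m the size of the bitarray (i.e. the Bloom filter size), p the tolerated false-positive rate, and k the number of hash functions. They are computed as follows (round fractional results up):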
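These are the standard Bloom filter relations. `optimalNumOfBits` and `optimalNumOfHashFunctions` in BloomFilterHelper below implement the first two, truncating and rounding rather than rounding up:

```latex
m = -\frac{n \ln p}{(\ln 2)^2}, \qquad
k = \frac{m}{n}\ln 2, \qquad
p_{\text{actual}} = \left(1 - e^{-kn/m}\right)^{k}
```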

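- Because the computed m and k may be fractional and are rounded up, the actual false-positive rate p must then be recalculated.

- Suppose a blacklist has 10 billion URLs of 64 B each, with a tolerated false-positive rate of 0.01%. By the formulas above, the Bloom filter needs about 24 GB, far less than the 640 GB a hash table would need.

- A lookup only computes k hashes and reads k bits, so every query completes in O(k) time, which is effectively constant.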
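The arithmetic for the 10-billion-URL example can be checked with a few lines of Java. This is a sketch: it rounds m and k up as the text says, then recomputes p. `BloomSizingSketch` is a hypothetical name.

```java
// Sizing sketch for n = 10^10 URLs at p = 0.01% (values from the example above).
final class BloomSizingSketch {
    static long bits(long n, double p) {
        return (long) Math.ceil(-n * Math.log(p) / (Math.log(2) * Math.log(2)));
    }

    static int hashFunctions(long n, long m) {
        return (int) Math.ceil((double) m / n * Math.log(2));
    }

    static double actualFalsePositiveRate(long n, long m, int k) {
        return Math.pow(1 - Math.exp(-(double) n * k / m), k);
    }

    public static void main(String[] args) {
        long n = 10_000_000_000L;
        double p = 0.0001;
        long m = bits(n, p);
        int k = hashFunctions(n, m);
        System.out.printf("m = %d bits (about %.1f GB), k = %d, actual p = %.6f%n",
                m, m / 8.0 / 1e9, k, actualFalsePositiveRate(n, m, k));
        System.out.printf("plain storage at 64 B per URL: %.0f GB%n", n * 64 / 1e9);
    }
}
```

It reports roughly 24 GB and k = 14. Because k is rounded up from about 13.3, the recomputed p comes out marginally above 0.01%, which is exactly why p has to be recalculated.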

7.5、Usage

7.5.1、Jedis integration

package com.zhz.testredis.bloomfilter;

import com.google.common.base.Preconditions;

import com.google.common.hash.Funnel;

import com.google.common.hash.Hashing;

public class BloomFilterHelper<T> {

private int numHashFunctions;

private int bitSize;

private Funnel<T> funnel;

//Initialize the BloomFilterHelper: derive the bit-array size and the number of hash functions from n and p

public BloomFilterHelper(Funnel<T> funnel, int expectedInsertions, double fpp) {

//Guava precondition check: throws IllegalArgumentException if the condition is false

Preconditions.checkArgument(funnel != null, "funnel must not be null");

this.funnel = funnel;

bitSize = optimalNumOfBits(expectedInsertions, fpp);

numHashFunctions = optimalNumOfHashFunctions(expectedInsertions, bitSize);

}

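//Compute the k bit offsets for a value (double hashing h1 + i*h2, the same scheme Guava's BloomFilter uses)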

public int[] murmurHashOffset(T value) {

int[] offset = new int[numHashFunctions];

long hash64 = Hashing.murmur3_128().hashObject(value, funnel).asLong();

int hash1 = (int) hash64;

int hash2 = (int) (hash64 >>> 32);

for (int i = 1; i <= numHashFunctions; i++) {

int nextHash = hash1 + i * hash2;

if (nextHash < 0) {

nextHash = ~nextHash;

}

offset[i - 1] = nextHash % bitSize;

}

return offset;

}

/**

* Compute the bit-array length: m = -n * ln(p) / (ln 2)^2

*/

private int optimalNumOfBits(long n, double p) {

if (p == 0) {

p = Double.MIN_VALUE;

}

return (int) (-n * Math.log(p) / (Math.log(2) * Math.log(2)));

}

/**

* Compute the number of hash functions: k = (m / n) * ln 2

*/

private int optimalNumOfHashFunctions(long n, long m) {

return Math.max(1, (int) Math.round((double) m / n * Math.log(2)));

}

}

package com.zhz.testredis.bloomfilter;

import redis.clients.jedis.Jedis;

/**

* @author zhouhengzhe

* @Description: Redis-backed Bloom filter

* @date 2021/8/8 3:40 AM

*/

public class RedisBloomFilter<T> {

private Jedis jedis;

public RedisBloomFilter(Jedis jedis) {

this.jedis = jedis;

}

/**

* Add a value using the given Bloom filter configuration

*/

public <T> void addByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {

int[] offset = bloomFilterHelper.murmurHashOffset(value);

for (int i : offset) {

//redisTemplate.opsForValue().setBit(key, i, true);

jedis.setbit(key, i, true);

}

}

/**

* Check whether a value may exist, using the given Bloom filter configuration

*/

public <T> boolean includeByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {

int[] offset = bloomFilterHelper.murmurHashOffset(value);

for (int i : offset) {

//if (!redisTemplate.opsForValue().getBit(key, i)) {

if (!jedis.getbit(key, i)) {

return false;

}

}

return true;

}

}

package com.zhz.testredis.bloomfilter;

import com.google.common.hash.Funnels;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisPoolConfig;

import java.nio.charset.Charset;

/**

* @author zhouhengzhe

* @Description: test the Bloom filter

* @date 2021/8/8 3:35 AM

*/

public class JedisBloomFilterTest {

public static void main(String[] args) {

JedisPoolConfig jedisPoolConfig = new JedisPoolConfig();

jedisPoolConfig.setMaxTotal(10);

jedisPoolConfig.setMaxIdle(5);

jedisPoolConfig.setMinIdle(2);

// timeout: here it is both the connection timeout and the read/write timeout; since Jedis 2.8 there are constructors that take connectionTimeout and soTimeout separately

JedisPool jedisPool = new JedisPool(jedisPoolConfig, "localhost", 6379, 3000, "123456");

Jedis jedis = null;

try {

//Borrow a connection from the Redis connection pool to run commands

jedis = jedisPool.getResource();

//******* Test the Redis-backed Bloom filter ********

BloomFilterHelper<CharSequence> bloomFilterHelper = new BloomFilterHelper<CharSequence>(Funnels.stringFunnel(Charset.defaultCharset()), 1000, 0.1);

RedisBloomFilter<Object> redisBloomFilter = new RedisBloomFilter<Object>(jedis);

int j = 0;

for (int i = 0; i < 100; i++) {

redisBloomFilter.addByBloomFilter(bloomFilterHelper, "bloom", i+"");

}

for (int i = 0; i < 10000; i++) {

boolean result = redisBloomFilter.includeByBloomFilter(bloomFilterHelper, "bloom", i+"");

if (!result) {

j++;

}

}

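//0-99 were added, so every other value reported present is a false positive

System.out.println("Reported absent: " + j + ", false positives: " + (10000 - 100 - j));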

} catch (Exception e) {

e.printStackTrace();

} finally {

//Note: this does not close the connection; with JedisPool, close() returns the Jedis instance to the pool.

if (jedis != null)

jedis.close();

}

}

}

7.5.2、Spring Boot integration

7.5.2.1、pom

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.16.0</version>

</dependency>

7.5.2.2、config

package com.zhz.config;

import org.redisson.Redisson;

import org.redisson.config.Config;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* @author zhouhengzhe

* @Description: Redisson configuration

* @date 2021/8/8 7:34 PM

*/

@Configuration

public class RedissonConfig {

@Bean

public Redisson redisson(){

//Single-server mode

Config config = new Config();

config.useSingleServer().setAddress("redis://localhost:6379").setDatabase(0).setPassword("123456");

return (Redisson) Redisson.create(config);

}

}

7.5.2.3、Usage

package com.zhz;

import org.redisson.Redisson;

import org.redisson.api.RBloomFilter;

import org.redisson.api.RedissonClient;

import org.redisson.config.Config;

/**

* @author zhouhengzhe

* @Description: Bloom filter

* @date 2021/8/8 8:11 PM

*/

public class RedissonBloomFilter {

public static void main(String[] args) {

Config config = new Config();

config.useSingleServer().setAddress("redis://localhost:6379").setPassword("123456");

//Create the Redisson client

RedissonClient redisson = Redisson.create(config);

RBloomFilter<String> bloomFilter = redisson.getBloomFilter("nameList");

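//Initialize the Bloom filter: 100000 expected elements, 3% false-positive rate; Redisson derives the bit-array size from these two parameters

bloomFilter.tryInit(100000L,0.03);

//Insert zhz and hyf into the Bloom filter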

bloomFilter.add("zhz");

bloomFilter.add("hyf");

//Check whether these names are in the Bloom filter

System.out.println(bloomFilter.contains("lhl"));//false

System.out.println(bloomFilter.contains("sfy"));//false

System.out.println(bloomFilter.contains("zhz"));//true

//Shut down the client so its threads and connections are released

redisson.shutdown();

}

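}

8、Distributed locks

8.1、Why use one

- When several threads of an application need synchronized access to a shared variable, Java's multithreading tools solve the problem.

- Note that this is a single-machine application: all requests are handled inside the JVM of the current server and mapped to operating-system threads, and the shared variable is just a piece of memory inside that JVM.

- In a traditional single-machine deployment, Java's concurrency APIs (such as ReentrantLock or synchronized) provide mutual exclusion, ensuring that under high concurrency a method or field is used by only one thread at a time.

- In a single-machine environment, Java provides many concurrency APIs.

- As the business grows, however, the monolithic single-machine system evolves into a distributed cluster with many threads and processes on different machines. The lock strategies that worked on a single machine no longer apply, and plain Java APIs cannot provide distributed locking.

- Solving this requires a cross-JVM mutual-exclusion mechanism that controls access to shared resources, which is exactly the problem distributed locks solve.

8.2、Requirements for a distributed lock

- In a distributed system, a method can be executed by only one thread on one machine at a time;

- Highly available lock acquisition and release;

- High-performance lock acquisition and release;

- Reentrancy;

- A lock expiry mechanism to prevent deadlocks;

- Non-blocking behavior: if the lock cannot be acquired, return a failure immediately.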

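8.3、Implementations

8.3.1、Database-based implementation

Implementation

A unique index: inserting a row for the lock name acquires the lock; deleting the row releases it.

Drawbacks:

(1) Because it relies on the database, the database's availability and performance directly determine those of the lock. The database therefore needs a two-node deployment, data replication, and primary/standby failover.

(2) It is not reentrant: until the thread releases the lock, the row still exists, so the same thread cannot insert it again. A column recording the machine and thread that hold the lock is needed. When acquiring again, first check whether the stored machine and thread match the current ones; if they do, grant the lock directly.

(3) There is no expiry: if the server crashes after inserting the row, the row is never deleted, and after the service recovers, nobody can acquire the lock. The table needs a column recording the expiry time, plus a scheduled job that deletes expired rows.

(4) It is not blocking: failing to acquire the lock returns failure immediately, so the acquisition logic must be improved to retry in a loop.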

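8.3.2、Redis-based implementation

8.3.2.1、Native Redis implementation

8.3.2.1.1、How it works

(1) To acquire the lock, create it with setnx, then give it a timeout with expire so that it is released automatically once the time is up. The lock's value is a randomly generated UUID, which is used to check ownership when releasing. Note that setnx followed by expire is two separate commands; SET key value NX EX seconds does both atomically.

(2) Acquisition also has its own timeout: if the lock cannot be obtained within that time, give up.

(3) To release the lock, check via the UUID that it is still our lock, and only then delete it.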
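The three steps can be modeled in plain Java to show why the release must be a single compare-and-delete. `InMemoryLockStore` is a hypothetical stand-in for Redis. `acquire` mirrors SET key uuid NX PX ttl, and `release` mirrors the widely used Lua script in `RELEASE_SCRIPT`, which Redis runs atomically. Expiry is checked lazily against a caller-supplied clock so the example stays deterministic.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for the Redis lock pattern (not a real client).
final class InMemoryLockStore {
    // The Lua script commonly sent with EVAL to release a lock only if we still own it
    static final String RELEASE_SCRIPT =
            "if redis.call('get', KEYS[1]) == ARGV[1] then " +
            "return redis.call('del', KEYS[1]) else return 0 end";

    private final Map<String, String> values = new HashMap<>();
    private final Map<String, Long> expiresAt = new HashMap<>();

    // Mirrors SET key value NX PX ttlMillis: value and expiry are set in one step
    synchronized boolean acquire(String key, String ownerId, long ttlMillis, long nowMillis) {
        evictIfExpired(key, nowMillis);
        if (values.containsKey(key)) {
            return false;
        }
        values.put(key, ownerId);
        expiresAt.put(key, nowMillis + ttlMillis);
        return true;
    }

    // Mirrors RELEASE_SCRIPT: compare and delete happen atomically (synchronized here)
    synchronized boolean release(String key, String ownerId, long nowMillis) {
        evictIfExpired(key, nowMillis);
        if (ownerId.equals(values.get(key))) {
            values.remove(key);
            expiresAt.remove(key);
            return true;
        }
        return false;
    }

    // Lazily drops a lock whose TTL has elapsed, as Redis would have expired the key
    private void evictIfExpired(String key, long nowMillis) {
        Long deadline = expiresAt.get(key);
        if (deadline != null && nowMillis >= deadline) {
            values.remove(key);
            expiresAt.remove(key);
        }
    }
}
```

The UUID matters in one scenario in particular. Suppose A's lock expires while A is still working, and B then acquires it. A's late release must not delete B's lock, and the owner check in `release` prevents exactly that.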

8.3.2.1.2、Spring Boot implementation

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

package com.zhz.splock;

import com.zhz.constant.RedisLockKeyConstant;

import lombok.extern.slf4j.Slf4j;

import org.junit.jupiter.api.Test;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.data.redis.core.StringRedisTemplate;

import java.util.UUID;

import java.util.concurrent.TimeUnit;

/**

* @author zhouhengzhe

* @Description: Redis lock with Spring Boot

* @date 2021/8/8 4:46 PM

*/

@SpringBootTest

@Slf4j

public class SpringBootRedisLock {

@Autowired

private StringRedisTemplate stringRedisTemplate;

/**

* Two-step version: acquire the lock, then set the expiry separately

*/

@Test

public void testLockSectionExpire(){

String clientId = UUID.randomUUID().toString();

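String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

try {

//Setting the expiry in a separate step is not atomic: if the process dies between the two calls, the lock is never released

Boolean flag = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, clientId);//equivalent to SETNX key value

stringRedisTemplate.expire(lockKey,30, TimeUnit.SECONDS);//30 seconds

if (!Boolean.TRUE.equals(flag)){

//Lock not acquired: return early

log.info("Failed to acquire the lock");

return ;

}

//Business logic

}finally {

//Only delete the lock if we still own it (the get and delete are two separate commands, so this check is not atomic)

if (clientId.equals(stringRedisTemplate.opsForValue().get(lockKey))){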

stringRedisTemplate.delete(lockKey);

}

}

}

/**

* One step: set the value and the expiry in a single atomic command

*/

@Test

public void testLockAlongWithExpire(){

String clientId = UUID.randomUUID().toString();

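String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

try {

//Value and expiry are set together, so the lock can never be left without a timeout

Boolean flag = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, clientId,30,TimeUnit.SECONDS);//equivalent to SET key value NX EX 30

if (!Boolean.TRUE.equals(flag)){

//Lock not acquired: return early

log.info("Failed to acquire the lock");

return ;

}

//Business logic

}finally {

//Only delete the lock if we still own it (still two commands; a Lua compare-and-delete script makes this atomic)

if (clientId.equals(stringRedisTemplate.opsForValue().get(lockKey))){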

stringRedisTemplate.delete(lockKey);

}

}

}

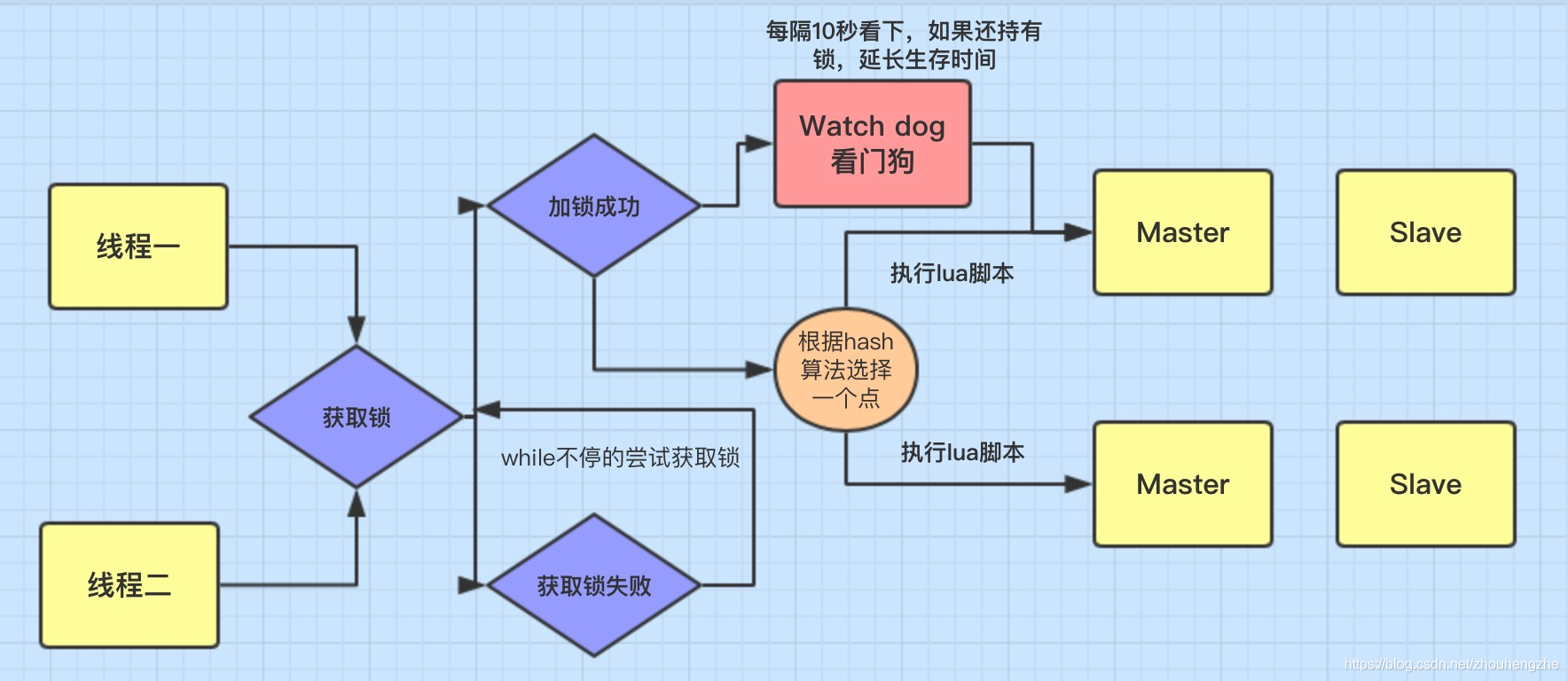

}8.4.2.1.3、springboot+redisson实现

How it works:

Implementation

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.16.0</version>

</dependency>

package com.zhz.redisson;

import cn.hutool.core.lang.Console;

import cn.hutool.core.thread.ConcurrencyTester;

import cn.hutool.core.thread.ThreadUtil;

import cn.hutool.core.util.RandomUtil;

import com.zhz.constant.RedisLockKeyConstant;

import lombok.extern.slf4j.Slf4j;

import org.junit.jupiter.api.Test;

import org.redisson.Redisson;

import org.redisson.RedissonRedLock;

import org.redisson.api.RLock;

import org.redisson.api.RReadWriteLock;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.data.redis.core.StringRedisTemplate;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicInteger;

/**

* @author zhouhengzhe

* @Description: using Redisson locks

* @date 2021/8/8 7:31 PM

*/

@SpringBootTest

@Slf4j

public class SpringBootRedissonLockTest {

@Autowired

private StringRedisTemplate stringRedisTemplate;

@Autowired

private Redisson redisson;

public AtomicInteger counter=new AtomicInteger(10);

/**

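* Redisson implementation. In practice, tryLock suits specific scenarios but is not recommended as a general-purpose distributed lock;

* for general use, void lock(long leaseTime, TimeUnit unit) is recommended, because under high concurrency it performs several times better than tryLock.

* lock() without a leaseTime is guarded by Redisson's watchdog: the lock expires after 30 seconds by default, but the watchdog keeps extending it while the holder is alive, so it is only released automatically if the holder crashes.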

*/

@Test

public void testLockForLock(){

ConcurrencyTester tester = ThreadUtil.concurrencyTest(100, () -> {

// Logic under test

String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

RLock redissonLock = redisson.getLock(lockKey);

try {

//Acquire the lock

redissonLock.lock();

//Business logic

System.out.println(Thread.currentThread().getName()+" holds the lock, counter = "+counter.decrementAndGet());

}finally {

redissonLock.unlock();

}

Console.log("{} test finished, lockKey: {}", Thread.currentThread().getName(), lockKey);

});

// Total execution time in milliseconds

Console.log(tester.getInterval());

}

/**

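* Compared with a plain Redis lock, RedLock is considerably more reliable. But everything has trade-offs: a business usually runs only one Redis Cluster

* or one Sentinel deployment, and neither can host RedLock, which needs several independent Redis masters. To use RedLock,

* you must build a dedicated environment, so unless the lock must be extremely reliable (e.g. in finance), RedLock is not really recommended.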

*/

@Test

public void testLockForRedLock(){

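String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

//In real use, each lock must come from a Redisson client connected to a different, independent Redis instance; this pseudo-code uses one client for simplicity

RLock lock1 = redisson.getLock(lockKey);

RLock lock2 = redisson.getLock(lockKey);

RLock lock3 = redisson.getLock(lockKey);

/**

* Build a RedissonRedLock from several RLock objects (this is the key difference)

*/

RedissonRedLock redLock = new RedissonRedLock(lock1, lock2, lock3);

boolean res = false;

try {

/**

* waitTime: the maximum time to wait for the lock; past this, acquisition is considered failed

* leaseTime: how long the lock is held before it expires automatically (set it longer than the business logic takes, so the work finishes while the lock is valid)

*/

res = redLock.tryLock(10, 30, TimeUnit.SECONDS);

if (res) {

//Lock acquired: handle the business logic here

}

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

throw new RuntimeException("Failed to acquire the lock", e);

} finally {

//Only unlock if the lock was actually acquired

if (res) {

redLock.unlock();

}

}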

}

@Test

public void testLockForRReadWriteLockInReadLock(){

String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

RReadWriteLock readWriteLock = redisson.getReadWriteLock(lockKey);

RLock rLock = readWriteLock.readLock();

rLock.lock();

try {

//Business logic: an ordinary read

} finally {

rLock.unlock();

}

}

@Test

public void testLockForRReadWriteLockInWriteLock(){

String lockKey= RedisLockKeyConstant.USER_PRODUCT +"id";//"id" stands for the user, order or product id; the prefix is usually a fixed identifier

RReadWriteLock readWriteLock = redisson.getReadWriteLock(lockKey);

RLock writeLock = readWriteLock.writeLock();

writeLock.lock();

try {

//Business logic: delete or modify

System.out.println("Write operation...");

stringRedisTemplate.delete("stock");

} finally {

writeLock.unlock();

}

}

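}

8.3.3、ZooKeeper-based implementation

Implementation

(1) Create a directory node mylock;

(2) Thread A, which wants the lock, creates an ephemeral sequential node under mylock;

(3) It lists all children of mylock and looks for siblings smaller than its own node; if there are none, its sequence number is the smallest and it holds the lock;

(4) Thread B lists all nodes, sees that its node is not the smallest, and sets a watch on the node just before its own;

(5) When thread A finishes, it deletes its node. Thread B receives the change event, checks whether its node is now the smallest, and if so, acquires the lock.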
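The five steps can be simulated without a ZooKeeper server. `ZkLockRecipeSketch` is a hypothetical in-memory model. A counter plays the role of the sequential suffix that ZooKeeper appends to ephemeral sequential nodes, and the smallest sequence number holds the lock. Each waiter only watches its immediate predecessor, which avoids waking every waiter on each release.

```java
import java.util.TreeSet;

// Hypothetical in-memory model of the ZooKeeper lock recipe (no real ZooKeeper involved).
final class ZkLockRecipeSketch {
    private final TreeSet<Long> children = new TreeSet<>(); // sequence numbers under /mylock
    private long nextSequence = 0;

    // Step 2: create an ephemeral sequential node; returns its sequence number
    synchronized long createNode() {
        long seq = nextSequence++;
        children.add(seq);
        return seq;
    }

    // Step 3: the client whose node has the smallest sequence number holds the lock
    synchronized boolean holdsLock(long seq) {
        return !children.isEmpty() && children.first() == seq;
    }

    // Step 4: a waiter watches the node just before its own (null if there is none)
    synchronized Long predecessorOf(long seq) {
        return children.lower(seq);
    }

    // Step 5: releasing the lock (or the client's session ending) deletes the node
    synchronized void deleteNode(long seq) {
        children.remove(seq);
    }
}
```

In real ZooKeeper the node is ephemeral, so it also disappears when the holder's session ends. That is how this approach avoids locks that are never released.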

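Advantages: highly available, reentrant and blocking; it also avoids locks that are never released, because a crashed client's ephemeral node disappears with its session.

Drawbacks: nodes are created and deleted frequently, so performance is lower than the Redis approach.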

9、Hands-on

9.1、Connecting to Redis with Jedis

9.1.1、pom dependencies

<!-- https://mvnrepository.com/artifact/redis.clients/jedis -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>3.6.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.72</version>

</dependency>

9.1.2、Test code

9.1.2.1、Testing the connection

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

/**

* @author zhouhengzhe

* @Description: com.zhz.testredis

* @date 2021/7/20 10:35 AM

*/

public class TestPing {

public static void main(String[] args) {

Jedis jedis=new Jedis("127.0.0.1",6379 );

jedis.auth("123456");

System.out.println(jedis.ping());

}

}

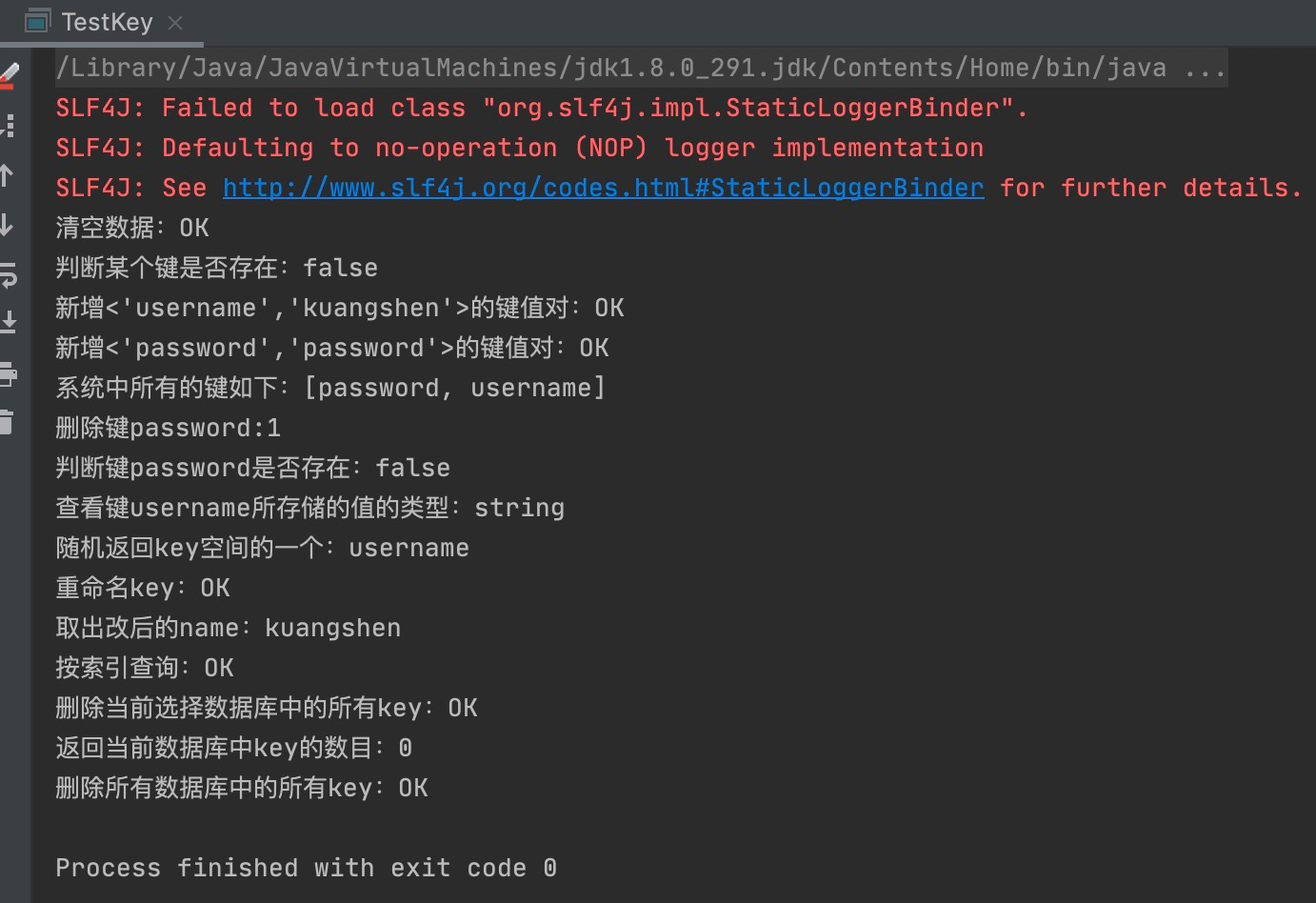

9.1.2.2、Testing keys

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

import java.util.Set;

/**

* @author zhouhengzhe

* @Description: test keys

* @date 2021/7/21 2:52 AM

*/

public class TestKey {

public static void main(String[] args) {

Jedis jedis = new Jedis("127.0.0.1", 6379);

jedis.auth("123456");

System.out.println("Flush the database: "+jedis.flushDB());

System.out.println("Does the key exist: "+jedis.exists("username"));

System.out.println("Add the <'username','kuangshen'> pair: "+jedis.set("username", "kuangshen"));

System.out.println("Add the <'password','password'> pair: "+jedis.set("password", "password"));

System.out.print("All keys in the database: ");

Set<String> keys = jedis.keys("*");

System.out.println(keys);

System.out.println("Delete key password: "+jedis.del("password"));

System.out.println("Does key password exist: "+jedis.exists("password"));

System.out.println("Type of the value stored at username: "+jedis.type("username"));

System.out.println("Return a random key from the keyspace: "+jedis.randomKey());

System.out.println("Rename a key: "+jedis.rename("username","name"));

System.out.println("Get the renamed key name: "+jedis.get("name"));

System.out.println("Select a database by index: "+jedis.select(0));

System.out.println("Delete all keys in the current database: "+jedis.flushDB());

System.out.println("Number of keys in the current database: "+jedis.dbSize());

System.out.println("Delete all keys in all databases: "+jedis.flushAll());

}

}

9.1.2.3、Testing String

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

import java.util.concurrent.TimeUnit;

/**

* @author zhouhengzhe

* @Description: test string

* @date 2021/7/21 2:58 AM

*/

public class TestString {

public static void main(String[] args) {

//Create the connection

Jedis jedis=new Jedis("127.0.0.1",6379 );

//Authenticate with the password

jedis.auth("123456");

jedis.flushDB();

System.out.println("-------------Add data-------------");

System.out.println(jedis.set("zhz", "xiaobai"));

System.out.println(jedis.set("zhz1", "xiaobai1"));

System.out.println(jedis.set("zhz2", "xiaobai2"));

System.out.println(jedis.set("zhz3", "xiaobai3"));

System.out.println("Delete key zhz1: "+jedis.del("zhz1"));

System.out.println("Get key zhz1: "+jedis.get("zhz1"));

System.out.println("Modify zhz: "+jedis.set("zhz","lisi"));

System.out.println("Append a value to zhz2: "+jedis.append("zhz2","end"));

System.out.println("Get the value of zhz2: "+jedis.get("zhz2"));

System.out.println("Set multiple key-value pairs: "+jedis.mset("k1","v1","k2","v2","k3","v3"));

System.out.println("Get multiple key-value pairs: "+jedis.mget("k1","k2","k3"));

System.out.println("Delete multiple key-value pairs: "+jedis.del("k1","k2"));

System.out.println("Get multiple key-value pairs: "+jedis.mget("k1","k2","k3"));

jedis.flushDB();

System.out.println("===========Add key-value pairs without overwriting existing values==============");

System.out.println(jedis.setnx("key1", "value1"));

System.out.println(jedis.setnx("key2", "value2"));

System.out.println(jedis.setnx("key2", "value2-new"));

System.out.println(jedis.get("key1"));

System.out.println(jedis.get("key2"));

System.out.println("===========Add a key-value pair with an expiry=============");

System.out.println(jedis.setex("key3", 2, "value3"));

System.out.println(jedis.get("key3"));

try {

//Verify the expiry

TimeUnit.SECONDS.sleep(3);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println(jedis.get("key3"));

System.out.println("===========Get the old value and set a new one==========");

System.out.println(jedis.getSet("key2", "key2GetSet"));

System.out.println(jedis.get("key2"));

System.out.println("Substring of key2's value: "+jedis.getrange("key2", 2, 4));

}

}

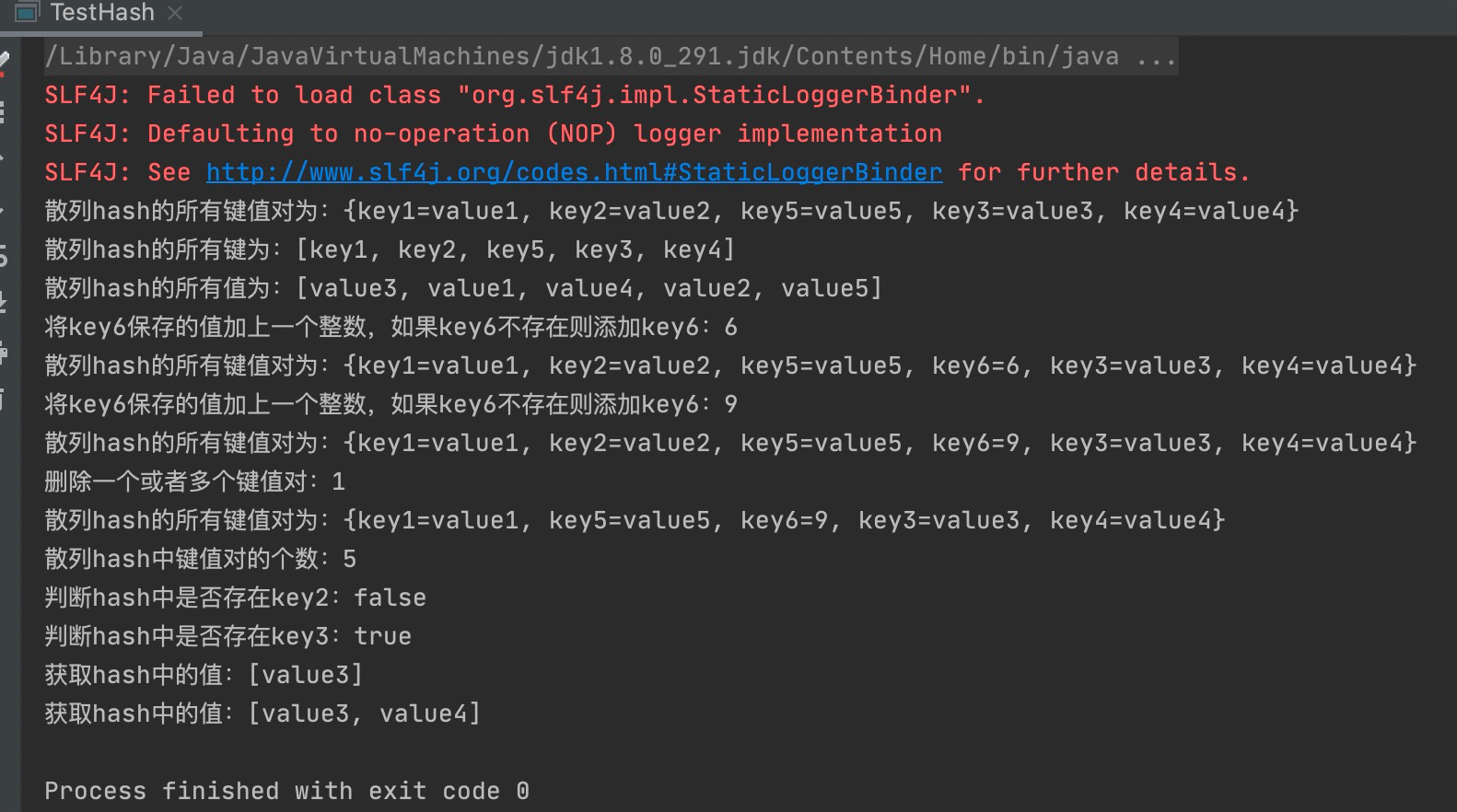

9.1.2.4、Testing Hash

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

import java.util.HashMap;

import java.util.Map;

/**

* @author zhouhengzhe

* @Description: test hash

* @date 2021/7/21 2:59 AM

*/

public class TestHash {

public static void main(String[] args) {

Jedis jedis = new Jedis("127.0.0.1", 6379);

jedis.auth("123456");

jedis.flushDB();

Map<String,String> map = new HashMap<String,String>();

map.put("key1","value1");

map.put("key2","value2");

map.put("key3","value3");

map.put("key4","value4");

//Add the map's entries to the hash stored at key "hash"

jedis.hmset("hash",map);

//Add field key5 with value value5 to the hash named hash

jedis.hset("hash", "key5", "value5");

System.out.println("All field-value pairs of hash: "+jedis.hgetAll("hash"));//return Map<String,String>

System.out.println("All fields of hash: "+jedis.hkeys("hash"));//return Set<String>

System.out.println("All values of hash: "+jedis.hvals("hash"));//return List<String>

System.out.println("Add an integer to key6's value, creating key6 if it does not exist: "+jedis.hincrBy("hash", "key6", 6));

System.out.println("All field-value pairs of hash: "+jedis.hgetAll("hash"));

System.out.println("Add an integer to key6's value, creating key6 if it does not exist: "+jedis.hincrBy("hash", "key6", 3));

System.out.println("All field-value pairs of hash: "+jedis.hgetAll("hash"));

System.out.println("Delete one or more fields: "+jedis.hdel("hash", "key2"));

System.out.println("All field-value pairs of hash: "+jedis.hgetAll("hash"));

System.out.println("Number of fields in hash: "+jedis.hlen("hash"));

System.out.println("Does field key2 exist in hash: "+jedis.hexists("hash","key2"));

System.out.println("Does field key3 exist in hash: "+jedis.hexists("hash","key3"));

System.out.println("Get values from hash: "+jedis.hmget("hash","key3"));

System.out.println("Get values from hash: "+jedis.hmget("hash","key3","key4"));

}

}

9.1.2.5、Testing List

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

/**

* @author zhouhengzhe

* @Description: test list

* @date 2021/7/21 2:59 AM

*/

public class TestList {

public static void main(String[] args) {

Jedis jedis = new Jedis("127.0.0.1", 6379);

jedis.auth("123456");

jedis.flushDB();

System.out.println("===========Add a list===========");

jedis.lpush("collections", "ArrayList", "Vector", "Stack", "HashMap", "WeakHashMap", "LinkedHashMap");

jedis.lpush("collections", "HashSet");

jedis.lpush("collections", "TreeSet");

jedis.lpush("collections", "TreeMap");

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));//-1 is the last element, -2 the second to last; end = -1 returns the whole list

System.out.println("Elements 0-3 of collections: "+jedis.lrange("collections",0,3));

System.out.println("===============================");

// Remove the given value; the second argument is how many occurrences to remove (when there are duplicates). Removal starts from the head, so the most recently pushed occurrences go first, like popping a stack

System.out.println("Number of elements removed: "+jedis.lrem("collections", 2, "HashMap"));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("Remove elements outside index range 0-3: "+jedis.ltrim("collections", 0, 3));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("Pop from the left end of collections: "+jedis.lpop("collections"));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("Push to the right end of collections (the counterpart of lpush): "+jedis.rpush("collections", "EnumMap"));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("Pop from the right end of collections: "+jedis.rpop("collections"));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("Set the element at index 1 of collections: "+jedis.lset("collections", 1, "LinkedArrayList"));

System.out.println("Contents of collections: "+jedis.lrange("collections", 0, -1));

System.out.println("===============================");

System.out.println("Length of collections: "+jedis.llen("collections"));

System.out.println("Element at index 2 of collections: "+jedis.lindex("collections", 2));

System.out.println("===============================");

jedis.lpush("sortedList", "3","6","2","0","7","4");

System.out.println("sortedList before sorting: "+jedis.lrange("sortedList", 0, -1));

//SORT returns a sorted copy; it does not modify the stored list

System.out.println(jedis.sort("sortedList"));

System.out.println("sortedList after SORT (unchanged): "+jedis.lrange("sortedList", 0, -1));

}

}

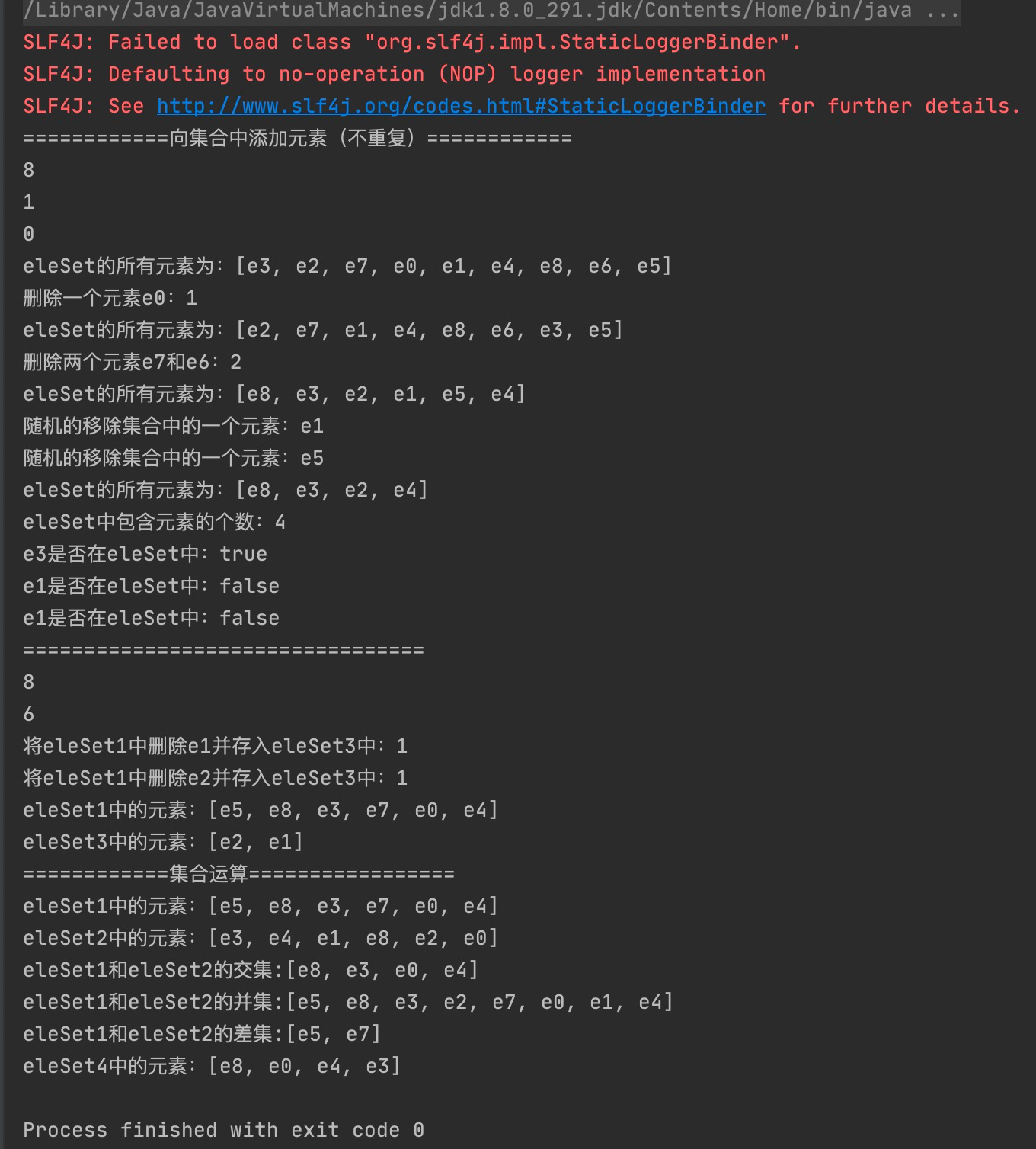

9.1.2.6、Testing Set

package com.zhz.testredis;

import redis.clients.jedis.Jedis;

/**

* @author zhouhengzhe

* @Description: test Set

* @date 2021/7/21 2:59 AM

*/

public class TestSet {

public static void main(String[] args) {

Jedis jedis = new Jedis("127.0.0.1", 6379);

jedis.auth("123456");

jedis.flushDB();

System.out.println("============Add elements to a set (no duplicates)============");

System.out.println(jedis.sadd("eleSet", "e1","e2","e4","e3","e0","e8","e7","e5"));

System.out.println(jedis.sadd("eleSet", "e6"));

System.out.println(jedis.sadd("eleSet", "e6"));

System.out.println("All elements of eleSet: "+jedis.smembers("eleSet"));

System.out.println("Remove one element, e0: "+jedis.srem("eleSet", "e0"));

System.out.println("All elements of eleSet: "+jedis.smembers("eleSet"));

System.out.println("Remove two elements, e7 and e6: "+jedis.srem("eleSet", "e7","e6"));

System.out.println("All elements of eleSet: "+jedis.smembers("eleSet"));

System.out.println("Remove a random element from the set: "+jedis.spop("eleSet"));

System.out.println("Remove a random element from the set: "+jedis.spop("eleSet"));

System.out.println("All elements of eleSet: "+jedis.smembers("eleSet"));

System.out.println("Number of elements in eleSet: "+jedis.scard("eleSet"));

System.out.println("Is e3 in eleSet: "+jedis.sismember("eleSet", "e3"));

System.out.println("Is e1 in eleSet: "+jedis.sismember("eleSet", "e1"));

System.out.println("Is e5 in eleSet: "+jedis.sismember("eleSet", "e5"));

System.out.println("=================================");

System.out.println(jedis.sadd("eleSet1", "e1","e2","e4","e3","e0","e8","e7","e5"));

System.out.println(jedis.sadd("eleSet2", "e1","e2","e4","e3","e0","e8"));

System.out.println("Remove e1 from eleSet1 and add it to eleSet3: "+jedis.smove("eleSet1", "eleSet3", "e1"));//move the member

System.out.println("Remove e2 from eleSet1 and add it to eleSet3: "+jedis.smove("eleSet1", "eleSet3", "e2"));

System.out.println("Elements of eleSet1: "+jedis.smembers("eleSet1"));

System.out.println("Elements of eleSet3: "+jedis.smembers("eleSet3"));

System.out.println("============Set operations=================");

System.out.println("Elements of eleSet1: "+jedis.smembers("eleSet1"));

System.out.println("Elements of eleSet2: "+jedis.smembers("eleSet2"));

System.out.println("Intersection of eleSet1 and eleSet2: "+jedis.sinter("eleSet1","eleSet2"));

System.out.println("Union of eleSet1 and eleSet2: "+jedis.sunion("eleSet1","eleSet2"));

System.out.println("Difference of eleSet1 and eleSet2: "+jedis.sdiff("eleSet1","eleSet2"));//in eleSet1 but not in eleSet2

jedis.sinterstore("eleSet4","eleSet1","eleSet2");//compute the intersection and store it in the destination key eleSet4

System.out.println("Elements of eleSet4: "+jedis.smembers("eleSet4"));

}

}

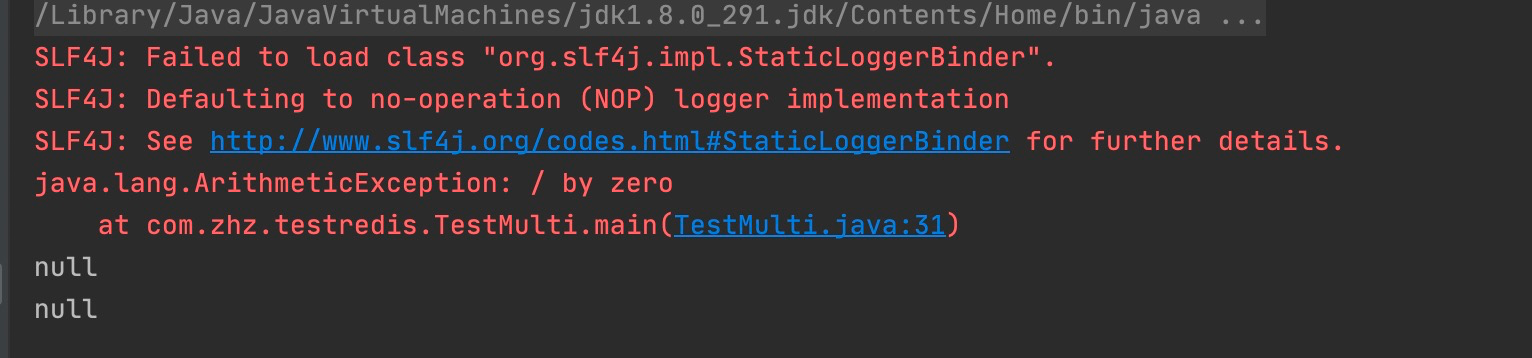
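The three set operations map directly onto `java.util.Set` operations: `SINTER` is `retainAll`, `SUNION` is `addAll`, and `SDIFF` is `removeAll` applied to the first set (so argument order matters). The sketch below is a hypothetical plain-Java model, not the Jedis API, and assumes the contents of eleSet1 after the two `SMOVE` calls above. A `TreeSet` is used only so the printed order is stable; real Redis sets are unordered.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of SINTER / SUNION / SDIFF using java.util sets.
public class RedisSetOpsModel {

    static Set<String> sinter(Set<String> a, Set<String> b) {
        Set<String> r = new TreeSet<>(a);
        r.retainAll(b); // keep members present in both sets
        return r;
    }

    static Set<String> sunion(Set<String> a, Set<String> b) {
        Set<String> r = new TreeSet<>(a);
        r.addAll(b);    // members present in either set
        return r;
    }

    static Set<String> sdiff(Set<String> a, Set<String> b) {
        Set<String> r = new TreeSet<>(a);
        r.removeAll(b); // members of a that are not in b
        return r;
    }

    public static void main(String[] args) {
        // eleSet1 after e1 and e2 were moved to eleSet3
        Set<String> eleSet1 = new TreeSet<>(Set.of("e0", "e3", "e4", "e5", "e7", "e8"));
        Set<String> eleSet2 = new TreeSet<>(Set.of("e0", "e1", "e2", "e3", "e4", "e8"));
        System.out.println(sinter(eleSet1, eleSet2)); // [e0, e3, e4, e8]
        System.out.println(sunion(eleSet1, eleSet2)); // [e0, e1, e2, e3, e4, e5, e7, e8]
        System.out.println(sdiff(eleSet1, eleSet2));  // [e5, e7]
    }
}
```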

9.1.2.7 Testing MULTI (transactions)

```java
package com.zhz.testredis;

import com.alibaba.fastjson.JSONObject;
import redis.clients.jedis.Jedis;
import redis.clients.jedis.Transaction;

/**
 * @author zhouhengzhe
 * @Description: Testing multi
 * @date 2021/7/21 3:00 AM
 */
public class TestMulti {

    public static void main(String[] args) {
        // Create a client connection; the Redis server must already be running
        Jedis jedis = new Jedis("127.0.0.1", 6379);
        jedis.auth("123456");
        jedis.flushDB();

        JSONObject jsonObject = new JSONObject();
        jsonObject.put("hello", "world");
        jsonObject.put("name", "java");

        // Start a transaction: commands are queued until exec() is called
        Transaction multi = jedis.multi();
        String result = jsonObject.toJSONString();
        try {
            // Queue a write
            multi.set("json", result);
            // Queue a second write
            multi.set("json2", result);
            // Deliberately throw an ArithmeticException (division by zero)
            int i = 100 / 0;
            // Only reached when no exception occurred: execute the queued commands
            multi.exec();
        } catch (Exception e) {
            e.printStackTrace();
            // Discard the queued commands. This is not a rollback (Redis has no rollback):
            // the queued commands were simply never executed, so nothing is written
            multi.discard();
        } finally {
            // Both print null here, because the transaction was discarded
            System.out.println(jedis.get("json"));
            System.out.println(jedis.get("json2"));
            // Always close the client
            jedis.close();
        }
    }
}
```

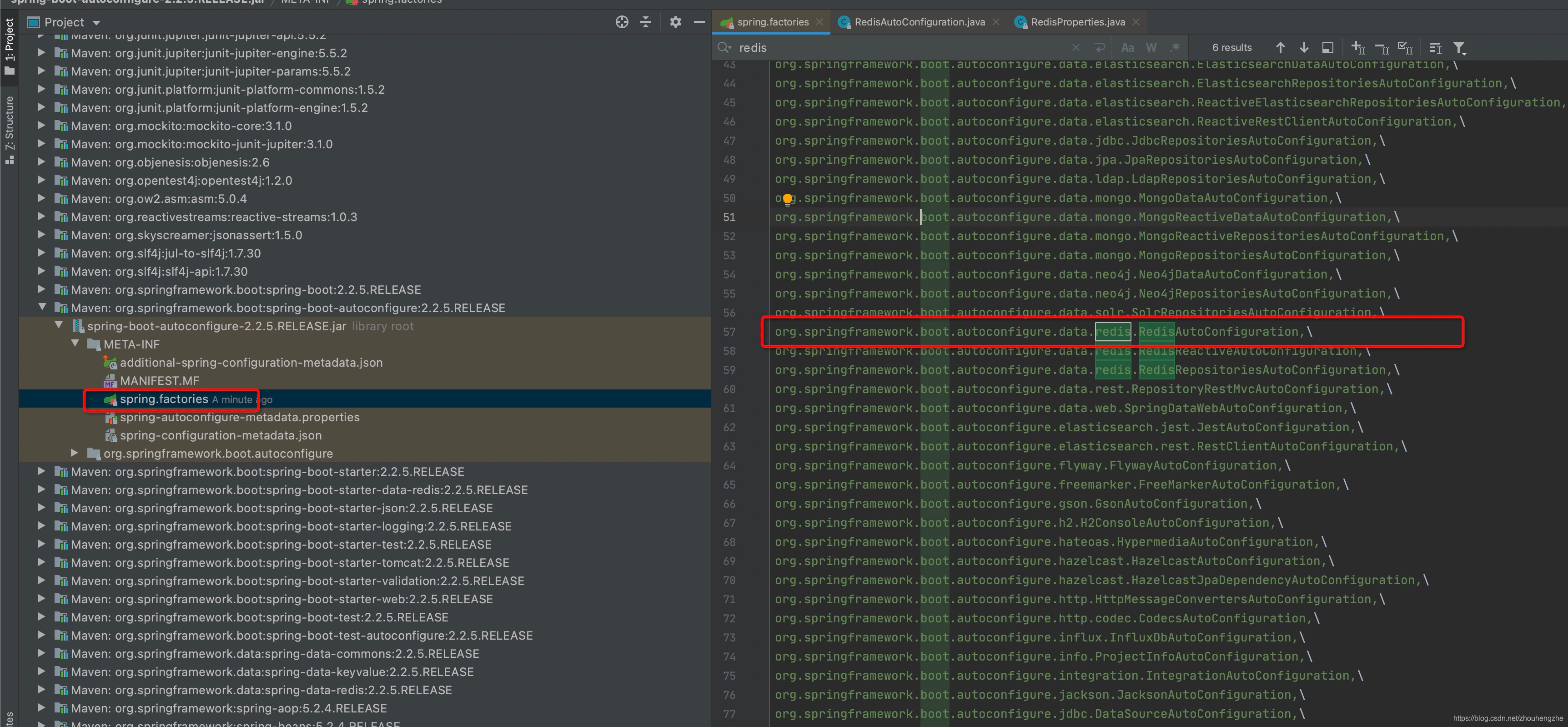

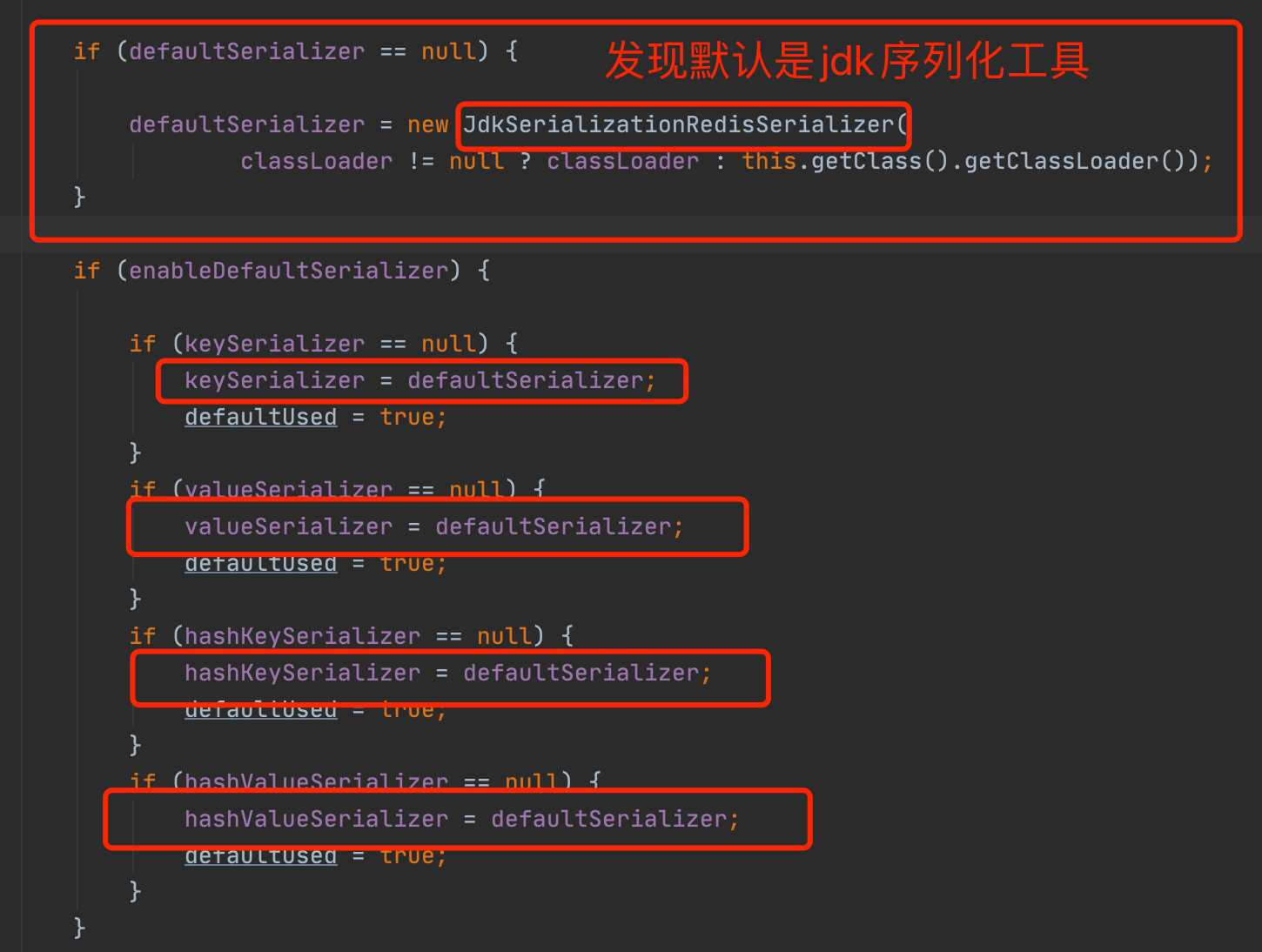
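It helps to keep Redis transaction semantics separate from database rollback: between `MULTI` and `EXEC` commands are only queued, `DISCARD` drops the queue, and if one command fails at execution time (for example `INCR` on a non-numeric value) the other queued commands still run and are not undone. The sketch below is a simplified, hypothetical in-memory model of that behaviour (`RedisTxModel`, `queueSet`, and `queueFailing` are illustrative names, not the Jedis API).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical, simplified model of MULTI / EXEC / DISCARD semantics.
public class RedisTxModel {
    final Map<String, String> store = new HashMap<>();
    private final List<Consumer<Map<String, String>>> queue = new ArrayList<>();

    // Queue a SET; nothing touches the store until exec()
    void queueSet(String key, String value) {
        queue.add(db -> db.put(key, value));
    }

    // Queue a command that fails when executed, like INCR on a non-numeric value
    void queueFailing() {
        queue.add(db -> {
            throw new IllegalStateException("ERR value is not an integer or out of range");
        });
    }

    // EXEC: run every queued command in order; a runtime error in one command
    // is reported in its reply but neither stops nor undoes the others
    List<String> exec() {
        List<String> replies = new ArrayList<>();
        for (Consumer<Map<String, String>> cmd : queue) {
            try {
                cmd.accept(store);
                replies.add("OK");
            } catch (RuntimeException e) {
                replies.add(e.getMessage());
            }
        }
        queue.clear();
        return replies;
    }

    // DISCARD: drop the queued commands without executing any of them
    void discard() {
        queue.clear();
    }

    public static void main(String[] args) {
        RedisTxModel tx = new RedisTxModel();
        tx.queueSet("json", "{}");
        tx.queueSet("json2", "{}");
        tx.discard();
        System.out.println(tx.store.get("json")); // null

        tx.queueSet("a", "1");
        tx.queueFailing();
        tx.queueSet("b", "2");
        System.out.println(tx.exec());  // [OK, ERR value is not an integer or out of range, OK]
        System.out.println(tx.store);   // both a and b are set
    }
}
```

One case the model leaves out: if a command is rejected while being queued (for example, wrong number of arguments), Redis 2.6.5 and later refuse to run the whole transaction at `EXEC`.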

9.2 Integrating Redis with Spring Boot

Source code analysis of Spring Boot's Redis auto-configuration:

```java
@Configuration(proxyBeanMethods = false)
@ConditionalOnClass(RedisOperations.class)
@EnableConfigurationProperties(RedisProperties.class)
@Import({ LettuceConnectionConfiguration.class, JedisConnectionConfiguration.class })
public class RedisAutoConfiguration {

    // Backs off when a bean named "redisTemplate" already exists,
    // so you can define your own redisTemplate to replace this default
    @Bean
    @ConditionalOnMissingBean(name = "redisTemplate")
    public RedisTemplate<Object, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory)
            throws UnknownHostException {
        // The default template configures almost nothing, yet objects stored in Redis
        // normally need a serializer; with none set, RedisTemplate falls back to JDK serialization.
        // Both generic types are Object, so values must be cast on every use, which is inconvenient
        RedisTemplate<Object, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(redisConnectionFactory);
        return template;
    }

    @Bean
    @ConditionalOnMissingBean
    public StringRedisTemplate stringRedisTemplate(RedisConnectionFactory redisConnectionFactory)
            throws UnknownHostException {
        // Strings are the most common case, so a StringRedisTemplate is provided by default
        StringRedisTemplate template = new StringRedisTemplate();
        template.setConnectionFactory(redisConnectionFactory);
        return template;
    }
}
```

9.2.1 POM dependencies

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
</dependency>
```

9.2.2 YAML configuration

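```yaml
# Spring Boot auto-configures each module through an XXXAutoConfiguration class,
# and each auto-configuration class is bound to an XXXProperties class
# Redis settings (Spring Boot 2.x prefix; Spring Boot 3.x uses spring.data.redis)
spring:
  redis:
    host: 127.0.0.1
    password: 123456
    port: 6379
```

9.2.3 Test class (without a serializer)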
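Without a configured serializer, the default `RedisTemplate<Object, Object>` falls back to `JdkSerializationRedisSerializer`, which uses standard Java serialization, so a key such as `name` shows up in redis-cli with a binary prefix instead of as plain text. The sketch below reproduces those bytes with a plain `ObjectOutputStream` and renders them the way redis-cli escapes binary data; the class `JdkKeyPreview` is a hypothetical helper, and the sketch assumes the default JDK serializer is in effect.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;

// Hypothetical helper: shows how a JDK-serialized key looks in redis-cli.
public class JdkKeyPreview {

    // Serialize with ObjectOutputStream, then print printable ASCII as-is
    // and every other byte as a \xNN escape, as redis-cli does.
    static String preview(Object value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes.toByteArray()) {
            int u = b & 0xff;
            if (u >= 0x20 && u < 0x7f) {
                sb.append((char) u);
            } else {
                sb.append(String.format("\\x%02x", u));
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Stream header AC ED 00 05, TC_STRING 't', length 00 04, then the characters
        System.out.println(preview("name")); // \xac\xed\x00\x05t\x00\x04name
    }
}
```

This is why `StringRedisTemplate`, or a `RedisTemplate` whose key serializer is set to `StringRedisSerializer`, is usually preferred when the data needs to be readable from redis-cli.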

java

package com.zhz.redis02springboot;